
A complete A-Z of key terms in non-seismic geophysical surveying.

An accelerometer is a tool that measures Proper Acceleration. Proper Acceleration is the acceleration (the rate of change of velocity) of a body in its own instantaneous rest frame; this is different from Coordinate Acceleration, which is acceleration in a fixed coordinate system. For example, an accelerometer at rest on the surface of the Earth will measure an acceleration due to Earth's gravity of g ≈ 9.81 m/s², directed straight upwards (by definition). By contrast, an accelerometer in free fall (accelerating toward the centre of the Earth at about 9.81 m/s²) will measure zero.

When two or more accelerometers are coordinated with one another, they can measure the difference in gravity across their separation in space; in other words, the gradient of the gravitational field. Gravity Gradiometry is useful because absolute gravity is a weak effect and depends on the local density of the Earth, which can be highly variable.

An entity or property that differs from what is typical or expected, or from what a theoretical model predicts. The term can also refer to the measured difference between an observed value of a physical property and its expected value.

Anomalies are of great interest in resource exploration because they often indicate mineral, hydrocarbon, geothermal or other prospects and accumulations, as well as geological structures such as folds and faults.

The AGMA is an integral part of the eFTG instrument design and measures Scalar Gravity. The design of the AGMA is such that the gravimeter is located on the same stabilised platform as the gradiometer, meaning that the measurements are all on the same axis.

The vertical acceleration due to the Earth's gravity field. The acceleration of gravity at the Earth's surface is approximately 9.8 metres per second per second (32 feet per second per second); in other words, for every second an object is in free fall, its speed increases by about 9.8 metres per second. The absolute gravity value for a gravity survey area is obtained from the local base station, which is tied to the International Gravity Standardization Net.

An aeromagnetic survey is a common type of geophysical survey carried out using a magnetometer aboard or towed behind an aircraft. The basic principle is similar to a magnetic survey carried out with a hand-held magnetometer but allows much larger areas of the Earth’s surface to be covered quickly for regional reconnaissance. The aircraft typically flies in a grid-like pattern with height and line spacing determining the resolution of the data (and cost of the survey per unit area).

An on-board instrument that measures and records the airborne survey flight height above the ground surface (AGL). Laser or radar altimeters are used to keep the aircraft constantly within the range of the planned flight height.

Absolute Base is a regional Base Station where Absolute Gravity measurements are made. Absolute bases form part of the national standardised gravity network, which in turn is part of the worldwide International Gravity Standardization Net.

AGRF (Australian Geomagnetic Reference Field). See International Geomagnetic Reference Field (IGRF)

Aliasing is an effect of sampling data at discrete intervals in time or space. In signal processing, it is the overlapping of frequency components that results from sampling below the Nyquist rate (twice the highest frequency present in the signal). This overlap causes distortion or artifacts when the signal is reconstructed from its samples, so that the reconstructed signal differs from the original continuous signal. Aliasing in signals sampled in time is referred to as temporal aliasing; aliasing in spatially sampled signals is referred to as spatial aliasing.

Aliasing is generally avoided by applying low-pass filters, or anti-aliasing filters (AAF), to the input signal before sampling and when converting a signal from a higher to a lower sampling rate. Suitable reconstruction filtering should then be used when restoring the sampled signal to the continuous domain or converting it from a lower to a higher sampling rate. In computer graphics, spatial anti-aliasing techniques include fast approximate anti-aliasing (FXAA), multisample anti-aliasing and supersampling.
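A minimal NumPy sketch of both ideas follows, assuming a synthetic signal sampled in time. `aliased_frequency` gives the apparent frequency of a sinusoid that is sampled too slowly, and `decimate_with_antialias` (a hypothetical helper, not a library function) removes content above the new Nyquist frequency before downsampling. The idealised FFT brick-wall filter is used only for brevity.

```python
import numpy as np

def aliased_frequency(f_signal, f_sample):
    """Apparent frequency (Hz) of a pure sinusoid after sampling at f_sample (Hz).

    Any frequency above the Nyquist frequency (f_sample / 2) folds back into
    the range 0 .. f_sample / 2.
    """
    return abs(f_signal - f_sample * round(f_signal / f_sample))

def decimate_with_antialias(signal, factor):
    """Keep every `factor`-th sample after removing content above the new Nyquist.

    The low-pass step is an idealised FFT brick-wall filter, chosen for
    brevity; practical anti-aliasing filters use a gentler roll-off.
    """
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal))   # cycles per original sample
    spectrum[freqs >= 0.5 / factor] = 0.0  # new Nyquist, in cycles per original sample
    filtered = np.fft.irfft(spectrum, n=len(signal))
    return filtered[::factor]

# A 1 Hz signal plus a 7 Hz component, sampled at 100 Hz for 10 s
t = np.arange(1000) / 100.0
x = np.sin(2 * np.pi * 1.0 * t) + np.sin(2 * np.pi * 7.0 * t)

naive = x[::10]                          # 10 Hz sampling: 7 Hz folds to 3 Hz
safe = decimate_with_antialias(x, 10)    # 7 Hz removed before downsampling
```

Here `naive` still contains the 7 Hz component, now indistinguishable from a 3 Hz sinusoid, while `safe` retains only the 1 Hz signal.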

An Amplitude Anomaly is a local increase or decrease in potential field amplitude caused by changes in the subsurface geology (the distribution of susceptibility or density contrasts). As a rule, a high-amplitude anomaly is generated by a magnetic or gravity contact. For example, in magnetic surveys the highest-amplitude anomalies typically indicate lithological boundaries (i.e., susceptibility contrasts) of igneous rocks within the sedimentary section and upper basement. Low-amplitude anomalies usually indicate basement block structures such as horsts. Large regional amplitude anomalies (up to hundreds of nT or mGal) in shelf and continental margin areas are caused principally by the contact between oceanic and continental crust.

The Absolute Quantum Gravimeter (AQG) is a groundbreaking instrument based on quantum technologies. It measures gravity using laser-cooled atoms, precisely monitoring the ballistic free fall of these atoms in a vacuum, from which the gravitational acceleration can be accurately inferred.

It has several unique features, such as the ability to make absolute gravity measurements at the µGal level with no long-term drift. It can acquire data automatically and continuously for several months and has excellent immunity to ground vibrations (thanks to active compensation).

It is currently used for geophysical research (volcano monitoring, earthquake studies) and can also be applied to hydrology and aquifer management.

Band-pass refers to a type of filter or a frequency range that allows certain frequencies to pass through while attenuating or rejecting others. In magnetic and gravity surveys, a band-pass approach may be used to enhance the interpretation of specific geophysical anomalies. By applying a band-pass filter to the data, certain anomalies associated with target geological structures or mineral deposits can be enhanced, allowing geophysicists to focus on relevant features.
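As a sketch of the idea, the NumPy function below band-passes an evenly sampled profile in the wavenumber domain. The function name, the synthetic profile and the 200 to 2000 m pass band are illustrative assumptions; production filters normally use smooth roll-offs rather than this brick-wall cut-off, which can cause ringing.

```python
import numpy as np

def bandpass_profile(values, spacing, min_wavelength, max_wavelength):
    """Pass only wavelengths between min_wavelength and max_wavelength.

    values: evenly sampled profile (e.g. mGal or nT)
    spacing: sample interval, in the same length unit as the wavelengths
    Uses an idealised FFT brick-wall filter for clarity.
    """
    values = np.asarray(values, dtype=float)
    spectrum = np.fft.rfft(values)
    k = np.fft.rfftfreq(values.size, d=spacing)   # cycles per unit length
    wavelength = np.full(k.size, np.inf)          # zero wavenumber -> infinite wavelength
    wavelength[1:] = 1.0 / k[1:]
    keep = (wavelength >= min_wavelength) & (wavelength <= max_wavelength)
    spectrum[~keep] = 0.0
    return np.fft.irfft(spectrum, n=values.size)

# Synthetic 10 km profile sampled every 10 m: regional (5 km), target (500 m), noise (50 m)
x = np.arange(1000) * 10.0
profile = (np.sin(2 * np.pi * x / 5000) + 0.5 * np.sin(2 * np.pi * x / 500)
           + 0.2 * np.sin(2 * np.pi * x / 50))
target = bandpass_profile(profile, spacing=10.0, min_wavelength=200.0, max_wavelength=2000.0)
```

Only the 500 m component survives in `target`; the regional trend and the short-wavelength noise are rejected.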

In geology, the terms basement and crystalline basement are used to describe the rocks below a sedimentary basin or cover or, more generally, any metamorphic or igneous rocks lying below sedimentary rocks or sedimentary basins. In the same way, the sediments and/or sedimentary rocks above the basement can be called 'sedimentary cover'.

This anomaly is named after the French mathematician Pierre Bouguer (1698–1758), who demonstrated that gravitational attraction decreases with altitude. It is the value of gravity that remains after subtracting the theoretical gravity for the latitude of the measurement point and applying corrections for elevation: the free-air correction (which compensates for height above sea level, assuming there is only air between the measurement station and sea level) and the Bouguer correction (which removes the attraction of the rock between the station and sea level).
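The arithmetic can be sketched as follows for a simple Bouguer anomaly (no terrain correction). The sketch assumes GRS80 normal gravity (Somigliana's closed formula), the standard free-air gradient of 0.3086 mGal/m and a Bouguer slab of density 2.67 g/cm³; the function names are hypothetical.

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
MGAL_PER_MS2 = 1e5   # 1 m/s^2 = 100,000 mGal

def normal_gravity_grs80(lat_deg):
    """Theoretical (normal) gravity on the GRS80 ellipsoid, in mGal (Somigliana)."""
    gamma_e = 9.7803267715       # equatorial normal gravity, m/s^2
    k = 0.001931851353           # normal gravity constant
    e2 = 0.00669438002290        # first eccentricity squared
    s2 = math.sin(math.radians(lat_deg)) ** 2
    return gamma_e * (1 + k * s2) / math.sqrt(1 - e2 * s2) * MGAL_PER_MS2

def simple_bouguer_anomaly(g_obs_mgal, lat_deg, height_m, density_gcc=2.67):
    """Observed gravity minus normal gravity, plus free-air and minus Bouguer corrections."""
    free_air_corr = 0.3086 * height_m                                # mGal
    bouguer_corr = (2 * math.pi * G * density_gcc * 1000 * height_m  # infinite slab
                    * MGAL_PER_MS2)
    return g_obs_mgal - normal_gravity_grs80(lat_deg) + free_air_corr - bouguer_corr

# A station 100 m above sea level at 45 degrees latitude whose reading equals normal gravity
anomaly = simple_bouguer_anomaly(normal_gravity_grs80(45.0), 45.0, 100.0)
```

In this example the free-air correction (+30.86 mGal) and the Bouguer correction (about 11.2 mGal) leave an anomaly of roughly +19.7 mGal.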

The Bureau Gravimétrique International (BGI) World Gravity Map, WGM Release 1.0 (2012), is the first release of high-resolution grids and maps of the Earth's gravity anomalies (Bouguer, isostatic and surface free-air), computed at global scale in spherical geometry.

WGM2012 gravity anomalies are derived from the available Earth global gravity models EGM2008 and DTU10 and include 1′ × 1′ resolution terrain corrections derived from the ETOPO1 model that account for the contribution of most surface masses (atmosphere, land, oceans, inland seas, lakes, ice caps and ice shelves). These products were computed using a spherical harmonic approach, based on theoretical developments designed to achieve accurate computations at global scale.

Boresight adjustment, also known as Boresighting, is a procedure used to align or calibrate the pointing direction of a sensor or instrument with respect to a reference coordinate system. The term “boresight” refers to the line of sight or optical axis of the sensor. Boresighting is commonly performed in remote sensing and optical instrumentation.

The purpose of boresight adjustment is to ensure that the sensor accurately points in a known direction, allowing for precise measurements or observations. Inaccurate boresight alignment can lead to errors in data collection, misinterpretation of results, or misalignment of targeting systems.

The Bell-Bloom Magnetometer is another type of caesium vapour magnetometer which modulates the light applied to the cell. The Bell-Bloom magnetometer is named after the two scientists who first investigated the effect.

If the light is turned on and off at the frequency corresponding to the Earth's magnetic field, there is a change in the signal seen at the photodetector. The associated electronics use this to create a signal at exactly the frequency that corresponds to the external field. Both methods lead to high-performance magnetometers.

A Base Station in potential field geophysical surveying is a reference magnetic or gravity observation station. In airborne magnetic surveys it is used to monitor and record diurnal variations and magnetic storms, and to provide GPS correction data.

A Barometric Altimeter is an instrument that measures and records elevation above sea level, with a typical accuracy of about 0.3 m. Corrections must be made for spatial and temporal variations caused by weather conditions, and temperature and humidity corrections are also needed for precise ground elevations.

The Bouguer Correction is applied to gravity data to remove the gravitational effect of the subsurface mass between the point of measurement (for example, a gravity station) and the datum (usually sea level). In mGal, the Bouguer Correction can be written as:

$$BC = 0.04192\,\rho h_1 = 0.01278\,\rho h_2$$

where $\rho$ is the assumed average rock density (in g/cm³) between the station and datum elevations or, for stations below the datum elevation, the assumed density of the rock that is missing between the station and the datum; $h_1$ is the elevation above sea level (or datum), i.e. the thickness of the Bouguer Slab, in metres; and $h_2$ is the same quantity in feet. For land and airborne surveys, the Bouguer Correction is always subtracted from the observed data.
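Both coefficients follow from the infinite-slab formula 2πGρh. The short Python check below, which assumes G = 6.674 × 10⁻¹¹ m³ kg⁻¹ s⁻², reproduces them; the last digit of the metric coefficient (0.04193 here rather than 0.04192) depends on the value adopted for G. `bouguer_correction_mgal` is a hypothetical helper.

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2 (rounded CODATA value)
FOOT = 0.3048        # metres per international foot (exact)

# 2*pi*G*rho*h expressed in mGal for rho in g/cm^3 and h in metres:
# g/cm^3 -> kg/m^3 is x1000, and m/s^2 -> mGal is x1e5
COEF_PER_METRE = 2 * math.pi * G * 1000 * 1e5
COEF_PER_FOOT = COEF_PER_METRE * FOOT

def bouguer_correction_mgal(density_gcc, thickness, unit="m"):
    """Attraction (mGal) of an infinite Bouguer slab of given density and thickness."""
    if unit == "m":
        return COEF_PER_METRE * density_gcc * thickness
    if unit == "ft":
        return COEF_PER_FOOT * density_gcc * thickness
    raise ValueError("unit must be 'm' or 'ft'")

bc_100m = bouguer_correction_mgal(2.67, 100.0)   # about 11.2 mGal
```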

A Bouguer Slab is a 'slab' of infinite horizontal extent, finite thickness and assumed density (often 2.67 g/cm³) between the point of measurement and the datum (usually sea level). The Bouguer Slab is also referred to as the Bouguer Plate.

In the context of airborne geophysical surveying, a Bird is a streamlined cylindrical housing (suspended or towed from an aircraft by a cable) in which the sensors are mounted. To minimise the magnetic effects of the aircraft, a bird is towed on a cable 50 to 100 m behind it. Fixed-wing surveys are commonly flown with the sensors located within the airframe, in a stinger or sometimes in the wings, while helicopters are more likely to tow instrumentation.

In the context of a seismic geophysical survey a ‘bird’ helps maintain the streamer’s depth and transmits compass data down the streamer to the acquisition system.

In geophysics, a “Calculation Surface” can refer to a specific elevation or depth level within the Earth’s subsurface at which geophysical calculations are conducted. For example, in gravity or magnetic data processing, geophysicists might work with data on different calculation surfaces to interpret and model subsurface geological structures or anomalies.

Carbon capture and storage (CCS) is a process in which a relatively pure stream of carbon dioxide (CO2) from industrial sources is separated, treated and transported to a long-term storage location. For example, the CO2 stream to be captured may result from burning fossil fuels or biomass. Usually, the CO2 is captured from large sources, such as a chemical plant or biomass plant, and then stored in an underground geological formation. The aim is to reduce greenhouse gas emissions and thus mitigate climate change.

The CRUST1.0 model serves as the starting model in a more comprehensive effort to compile a global model of Earth's crust and lithosphere, LITHO1.0. CRUST1.0 is defined on a 1-degree grid and is based on a new database of crustal thickness data from seismic studies as well as receiver function studies. In areas where such constraints are still missing, for example in Antarctica, crustal thicknesses are estimated using gravity constraints.

The calculated vertical gradient is a vertical gradient of the magnetic or gravity field not measured during the survey but calculated by applying various algorithms.
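One common way to calculate the vertical gradient is in the wavenumber domain, by multiplying the spectrum of the field by |k|. The sketch below assumes an evenly sampled profile across 2D (strike-infinite) sources with z positive downward; gridded data would instead use the radial wavenumber of a 2D FFT. The function name is hypothetical, and the result is checked against the closed-form derivative of a buried 2D line mass.

```python
import numpy as np

def vertical_derivative_fft(profile, spacing):
    """First vertical derivative of an evenly sampled potential-field profile.

    Assumptions (illustrative): a 1D profile across 2D sources, z positive
    downward, so the wavenumber-domain operator is |k|, with k in radians
    per unit length.
    """
    profile = np.asarray(profile, dtype=float)
    spectrum = np.fft.rfft(profile)
    k = 2 * np.pi * np.fft.rfftfreq(profile.size, d=spacing)   # k >= 0, so k == |k|
    return np.fft.irfft(spectrum * k, n=profile.size)

# Check against a 2D line mass at 100 m depth (field scaled; arbitrary units)
x = np.arange(-5000.0, 5000.0, 10.0)
d = 100.0
g = d / (x**2 + d**2)
dgdz_fft = vertical_derivative_fft(g, spacing=10.0)
dgdz_exact = (d**2 - x**2) / (x**2 + d**2) ** 2
```

The long profile (50 source depths either side) keeps the periodic wrap-around of the FFT from disturbing the central part of the result.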

In chemical remanent magnetization (CRM), a magnetic mineral grain grows through a critical grain size, the blocking diameter. Until this diameter is reached, the grain's magnetic domain aligns itself with the Earth's magnetic field. Once the diameter is exceeded, the grain locks in that magnetization and retains a stable magnetic remanence over long periods of time.

Ferromagnetic minerals undergo chemical reactions including:

Chemical remanent magnetization can be acquired during fluid migration, during metamorphic processes, or while intrusive rocks cool slowly. Weathering processes that form new magnetic minerals also produce CRM; for example, hematite formed from magnetite acquires a CRM.

Complex Gradient is a 2D vector quantity corresponding to the resultant of the vertical and horizontal gradients. Complex Gradient is computed to interpret anomalies produced by dikes using characteristic points of their anomalies as well as phase plots.
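A minimal sketch of the amplitude and phase of the complex gradient, taking the horizontal derivative as the real part and the vertical derivative as the imaginary part (an assumed sign convention). For illustration it uses the gravity field of a 2D line mass rather than a dike, because its derivatives have simple closed forms; the function name is hypothetical.

```python
import numpy as np

def complex_gradient(dx, dz):
    """Amplitude and phase (degrees) of the complex gradient dx + i*dz."""
    c = np.asarray(dx, dtype=float) + 1j * np.asarray(dz, dtype=float)
    return np.abs(c), np.degrees(np.angle(c))

# Illustrative source: a 2D line mass at depth d (field scaled; arbitrary units),
# with z positive downward
d = 100.0
x = np.linspace(-1000.0, 1000.0, 201)
dx = -2 * d * x / (x**2 + d**2) ** 2          # horizontal derivative
dz = (d**2 - x**2) / (x**2 + d**2) ** 2       # vertical derivative

amplitude, phase = complex_gradient(dx, dz)
```

For this source the amplitude reduces to 1/(x² + d²): it peaks directly above the source, where the phase is 90°, and falls to half its peak at x = ±d, so the half-width gives the source depth.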

A Caesium Magnetometer is an optically pumped magnetometer. The sensor outputs a Larmor frequency (see Larmor Signal) that is proportional to the total magnetic field, and this frequency is then measured by the magnetometer's processor electronics. A sensitivity of 0.001 nT is typically achievable, over a total field intensity range of about 20,000 to 100,000 nT. For the caesium-133 isotope, the gyromagnetic ratio is about 82 times higher than that of the proton used in a Proton Precession Magnetometer, which is why Caesium Magnetometers have higher sensitivity.
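Converting a Larmor frequency to field strength is a single division by the sensor's Larmor constant. The constants below are approximate values (about 3.5 Hz/nT for caesium-133 and about 0.0426 Hz/nT for protons), assumed here for illustration; exact figures quoted vary slightly between sensors. The sketch also shows why the larger ratio helps sensitivity.

```python
# Approximate Larmor constants (Hz per nT); exact values vary slightly by sensor.
CS133_HZ_PER_NT = 3.498      # caesium-133 optically pumped magnetometer
PROTON_HZ_PER_NT = 0.042577  # proton precession magnetometer (gamma_p / 2*pi)

def field_from_larmor(freq_hz, hz_per_nt=CS133_HZ_PER_NT):
    """Total magnetic field (nT) corresponding to a measured Larmor frequency."""
    return freq_hz / hz_per_nt

field_nt = 50_000.0
cs_freq = field_nt * CS133_HZ_PER_NT        # about 175 kHz
proton_freq = field_nt * PROTON_HZ_PER_NT   # about 2.1 kHz
ratio = CS133_HZ_PER_NT / PROTON_HZ_PER_NT  # about 82

# Frequency change produced by a 0.001 nT field change on each sensor
cs_step_hz = 0.001 * CS133_HZ_PER_NT
proton_step_hz = 0.001 * PROTON_HZ_PER_NT
```

A 0.001 nT change shifts the caesium frequency by about 0.0035 Hz but the proton frequency by only about 0.00004 Hz, which is far harder to resolve in the same measurement time.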

The DC-3 aircraft, also known as the Douglas DC-3 or Dakota, was first developed and produced by the Douglas Aircraft Company in the 1930s and the design has been extensively modified and improved over the decades, resulting in an extremely safe and robust aircraft. It is widely regarded as one of the most significant transport aircraft in aviation history and played a crucial role in revolutionising air travel.

The SI unit of kilogram per cubic metre (kg/m³) and the cgs unit of gram per cubic centimetre (g/cm³) are the most commonly used units for density. One g/cm³ is equal to 1000 kg/m³. One cubic centimetre (abbreviated cc) is equal to one millilitre.

The de Havilland Canada DHC-6 Twin Otter is a Canadian STOL (Short Takeoff and Landing) utility aircraft developed by de Havilland Canada. The aircraft’s fixed tricycle undercarriage, STOL capabilities, twin turboprop engines, high rate of climb as well as its versatility and manoeuvrability have made it a successful surveying platform, particularly in areas with difficult flying environments.

A Digital Elevation Model (DEM) is a 3D computer-rendered representation of elevation data describing the terrain of the Earth's surface. A "global DEM" refers to a discrete global grid. DEMs are often used in Geographic Information Systems (GIS) and are the most common basis for digitally produced relief maps. A Digital Terrain Model (DTM) specifically represents the bare ground surface, while a DEM or Digital Surface Model (DSM) may represent the tree canopy or building roofs.

While a DSM may be useful for landscape modelling, city modelling and visualisation applications, a DTM is often required for flood or drainage modelling, land-use studies and geological applications.

Earthline also uses DTMs to provide a terrain correction for gravity surveying.

The term ‘Dipole’ generally refers to a magnetic dipole, which is a simple model used to represent the magnetic field produced by a magnetic source. In geophysics, magnetic dipoles are often used to describe the magnetic field generated by certain geological structures or magnetic materials.

In magnetic surveys, magnetic dipoles are often used as a simplified model to represent certain geological structures, such as ore bodies, magnetic anomalies, or magnetic bodies with well-defined magnetic properties. By understanding and characterising the magnetic field produced by magnetic dipoles, geophysicists can interpret and analyse the magnetic data obtained during surveys to gain insights into the subsurface geology and potential mineral deposits.
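The field of a point dipole has a compact closed form, B = (μ₀/4π)[3(m·r̂)r̂ − m]/r³. The sketch below evaluates it in any right-handed Cartesian frame and approximates the total-field anomaly as the projection of B onto the main-field direction, which assumes the anomaly is small compared with the Earth's field. The function names are hypothetical.

```python
import numpy as np

MU0_OVER_4PI = 1e-7  # T m / A

def dipole_field_nt(obs, src, moment):
    """Magnetic flux density (nT) at obs from a point dipole at src.

    obs, src: positions in metres; moment: dipole moment vector in A m^2.
    B = (mu0 / 4 pi) * (3 (m . r_hat) r_hat - m) / r^3
    """
    r = np.asarray(obs, dtype=float) - np.asarray(src, dtype=float)
    dist = np.linalg.norm(r)
    r_hat = r / dist
    m = np.asarray(moment, dtype=float)
    b_tesla = MU0_OVER_4PI * (3.0 * np.dot(m, r_hat) * r_hat - m) / dist**3
    return b_tesla * 1e9

def total_field_anomaly_nt(obs, src, moment, field_direction):
    """Approximate total-field anomaly: dipole field projected onto the main-field direction."""
    f_hat = np.asarray(field_direction, dtype=float)
    f_hat = f_hat / np.linalg.norm(f_hat)
    return float(np.dot(dipole_field_nt(obs, src, moment), f_hat))

# A 1e6 A m^2 dipole: 100 m along its axis the field is 200 nT; 100 m abeam it is -100 nT
on_axis = dipole_field_nt([0.0, 0.0, 100.0], [0.0, 0.0, 0.0], [0.0, 0.0, 1e6])
abeam = dipole_field_nt([100.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 1e6])
```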

This is a model of a geological structure in which layers or bodies of given lithologies are replaced by specified bodies of assumed density distribution.

The Magnetic Diurnal Variation, also known as the magnetic daily variation, is a phenomenon of the Earth's magnetic field: an oscillation of the field with a periodicity of about a day. It is caused by perturbations in the Earth's ionosphere and beyond, and depends primarily on local time and geographic latitude.

The Earth’s magnetic field arises from the movement of convection currents in the conductive metal fluid of the outer core (composed of a Fe–Ni alloy and other lighter elements). On the Earth’s surface, it resembles a dipole field. External magnetic fields, resulting from interactions with the solar wind, contribute to the formation of the magnetosphere.

The diurnal variation of the magnetic field cannot be accurately predicted or modelled and for that reason, it is monitored during the magnetic surveys, usually by a stationary magnetometer. Marine surveys face practical challenges in monitoring diurnal variations due to the distance between the survey area, stationary magnetometers, and magnetic observatories. Researchers have explored using nearby magnetic observatories to estimate diurnal variation corrections in marine surveys. The approach involves comparing magnetic field values at different times and days, both before and after correction. The Pearson’s Correlation between raw data and diurnal-corrected data indicates the effectiveness of this method.
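A minimal sketch of base-station diurnal correction, assuming the diurnal variation is the same at the base station and over the survey area and that both clocks are synchronised (for example via GPS time). The helper name and the synthetic data are hypothetical.

```python
import numpy as np

def diurnal_correct(survey_time_s, survey_nt, base_time_s, base_nt, base_datum_nt=None):
    """Remove the time-varying (diurnal) field recorded by a stationary base magnetometer.

    Base readings are linearly interpolated to the survey times. Only the variation
    about the base datum (by default, the mean base reading) is removed, so the
    corrected data keep a realistic absolute level.
    """
    base_at_survey = np.interp(survey_time_s, base_time_s, base_nt)
    if base_datum_nt is None:
        base_datum_nt = float(np.mean(base_nt))
    return np.asarray(survey_nt, dtype=float) - (base_at_survey - base_datum_nt)

# Synthetic day: a 20 nT daily oscillation on a 50,000 nT field, base sampled every 60 s
base_t = np.arange(0.0, 86400.0, 60.0)
base_b = 50_000.0 + 20.0 * np.sin(2 * np.pi * base_t / 86400.0)

survey_t = np.array([1_000.0, 20_000.0, 43_200.5])
geology = np.array([10.0, -5.0, 3.0])   # anomaly we want to recover (nT)
survey_b = 50_000.0 + geology + 20.0 * np.sin(2 * np.pi * survey_t / 86400.0)

corrected = diurnal_correct(survey_t, survey_b, base_t, base_b)
```

After correction, `corrected` is the geological anomaly on top of the 50,000 nT base level, with the daily oscillation removed.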

Equivalent Source processing, also known as the Equivalent Source Method (ESM) or Equivalent Layer technique, is a geophysical data processing and interpretation method used in potential field geophysics. It is primarily applied to magnetic and gravity data to model and interpret subsurface geological structures and density variations.

The concept behind the Equivalent Source processing is to represent the observed magnetic or gravity anomalies as if they were produced by a set of hypothetical point sources or “equivalent sources” located at a certain depth beneath the Earth’s surface. These equivalent sources mimic the observed anomalies, and by determining their distribution, depth, and strength, geophysicists can infer information about the subsurface geology.
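The sketch below applies the idea to a single profile. For brevity it swaps the point sources described above for 2D line sources (a simplification, not necessarily what production software uses), fits their strengths by least squares, and then uses them to upward-continue the field. The function names and the layer depth of three station spacings are assumptions for illustration.

```python
import numpy as np

def line_source_kernel(x_obs, z_obs, x_src, depth_src):
    """Vertical attraction of unit 2D line masses, omitting the constant 2G.

    x_obs: station positions (m); z_obs: station height above the datum (m)
    x_src: source positions (m);  depth_src: source depth below the datum (m)
    """
    sep = depth_src + z_obs                                    # vertical separation (m)
    dx = np.subtract.outer(np.asarray(x_obs, float), np.asarray(x_src, float))
    return sep / (dx**2 + sep**2)

def fit_equivalent_layer(x_obs, data, depth):
    """Least-squares strengths of equivalent line sources placed below each station."""
    kernel = line_source_kernel(x_obs, 0.0, x_obs, depth)
    strengths, *_ = np.linalg.lstsq(kernel, data, rcond=None)
    return strengths

# "True" anomaly: a single line mass 300 m deep, observed every 20 m at the datum
x = np.arange(-2000.0, 2001.0, 20.0)
observed = line_source_kernel(x, 0.0, np.array([0.0]), 300.0)[:, 0]

# Equivalent layer 60 m deep, then upward-continue the field to 100 m
strengths = fit_equivalent_layer(x, observed, depth=60.0)
predicted_100m = line_source_kernel(x, 100.0, x, 60.0) @ strengths
exact_100m = line_source_kernel(x, 100.0, np.array([0.0]), 300.0)[:, 0]
```

The shallow layer reproduces the observed data, and its upward-continued field matches the true field at 100 m away from the profile ends; in practice a damping term is usually added to the least-squares fit to stabilise noisy data.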

An alternating or transient electrical current induced in a conductive medium by a time-varying magnetic field. The eddy current generates its own electromagnetic field.

Gravity gradiometers are instruments which measure the spatial derivatives of the gravity vector. The most frequently used and intuitive component is the vertical gravity gradient, Gzz, which represents the rate of change of vertical gravity (gz) with height (z). It can be deduced by differencing the value of gravity at two points separated by a small vertical distance, l, and dividing by this distance. The two gravity measurements are provided by accelerometers which are matched and aligned to an extremely high level of accuracy.
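The differencing can be sketched for a buried point mass, where Gzz directly above the mass is known exactly (2GM/r³, with z positive down). The mass, depth, flying height and 0.3 m baseline are hypothetical values, not instrument specifications, and the function names are illustrative.

```python
G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
EOTVOS = 1e-9      # 1 E = 1e-9 s^-2

def gz_point_mass(mass_kg, depth_m, height_m):
    """Vertical attraction (m/s^2) directly above a buried point mass."""
    r = depth_m + height_m          # distance from the mass to the sensor
    return G * mass_kg / r**2

def gzz_by_differencing(mass_kg, depth_m, height_m, baseline_m):
    """Gzz (z positive down, in E) from two sensors baseline_m apart vertically."""
    g_lower = gz_point_mass(mass_kg, depth_m, height_m - baseline_m / 2)
    g_upper = gz_point_mass(mass_kg, depth_m, height_m + baseline_m / 2)
    return (g_lower - g_upper) / baseline_m / EOTVOS

# Hypothetical target: 1e10 kg excess mass 200 m deep, sensor flown 100 m above ground
estimate = gzz_by_differencing(1e10, 200.0, 100.0, baseline_m=0.3)
analytic = 2 * G * 1e10 / (200.0 + 100.0) ** 3 / EOTVOS   # about 49 E
difference_ugal = estimate * EOTVOS * 0.3 / 1e-8          # gravity difference across baseline
```

The gravity difference across the baseline is only about 1.5 µGal, roughly one part in 10⁹ of the total field, which is why the paired accelerometers must be matched so precisely.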

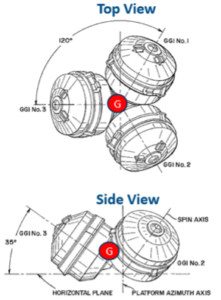

Several types of Lockheed Martin Full Tensor Gravity Gradiometer (FTG) are currently in operation; of these, the 3D Integrated Full Tensor Gravity Gradiometer (iFTG) and the Enhanced Full Tensor Gravity Gradiometer (eFTG) are exclusive to Earthline Consulting.

More recently, substantial improvements in instrument sensitivity and noise performance have become available to industry in the form of the Enhanced Full Tensor Gradiometer (eFTG). This full-tensor system is a scaled-up version of the FTG with increased sensitivity and lower noise, helping to resolve smaller and deeper geological features. The initial Gzz noise performance of the eFTG was expected to be around 2.5–4 E/√Hz, but in commercial use Earthline Consulting often sees noise levels of around 2 E/√Hz in good flying conditions.

External Disturbance Fields are magnetic disturbance fields generated by electric currents flowing in the ionosphere and magnetosphere and “mirror-currents” induced in the Earth and oceans by the external magnetic field time variations. The disturbance field, which is associated with diurnal field variations and magnetic storms, is affected by solar activity (solar wind), the interplanetary magnetic field and the Earth’s magnetic field.

The external magnetic field exhibits variations on several time scales, which may affect the applicability of magnetic reference models. Very long-period variations are related to the solar cycle of about 11 years. Short-term variations result from daily changes in solar radiation, atmospheric tides and conductivity. Irregular time variations are influenced by the solar wind. Perturbed magnetic states, called magnetic storms, occur and show impulsive and unpredictable rapid time variations.

The Equipotential Method is a technique to map a potential field generated by stationary electrodes by moving an electrode around the survey area.

Equipotential methods involve mapping the equipotential lines that result from a current flowing in the ground. Distortions from a systematic pattern indicate the presence of a body of different resistivity. The mise-à-la-masse or 'charged body potential' method involves placing one current electrode in an ore body to map its shape and location.

The unit of gravity gradient is the eotvos (abbreviated E). The eotvos is defined as 10⁻⁹ galileos (Gal) per centimetre. A person walking past at a distance of 2 metres would produce a gravity gradient signal of approximately one E. In both SI and cgs units, 1 eotvos = 10⁻⁹ s⁻². Large physical bodies such as mountains can give signals of several hundred eotvos. The unit is named after the Hungarian physicist Baron Loránd Eötvös de Vásárosnamény, who made pioneering studies of the gradient of the Earth's gravitational field.

The Lockheed Martin Enhanced Full Tensor Gravity Gradiometer (eFTG) is the 4th-generation and world's most advanced moving-base gravity gradiometer, with a noise floor about three times lower than that of the Full Tensor Gradiometer (FTG) and data of higher bandwidth. This instrument has been used in geophysical surveys since 2022 and is exclusive to Earthline Consulting.

These improvements mean eFTG data have increased accuracy and higher spatial resolution, therefore widening the range of geological targets that can be mapped with gravity gradiometry.

The eFTG system combines the best design elements of previous gradiometers, essentially comprising three digital partial tensor discs (GGIs) mounted in an FTG configuration. Each eFTG GGI carries eight accelerometers, with a measurement baseline roughly double the FTG accelerometer separation. The greater accelerometer count and longer baseline give the eFTG a threefold improvement in S/N over the entire bandwidth. With 24 accelerometers, the eFTG provides 12 gravity gradient outputs per measurement location (it essentially measures the full tensor twice, with double the accuracy in each case).

The Advanced Gravity Measurement Assemblage (AGMA) is an integral part of the eFTG instrument design and measures Scalar Gravity. The design of the AGMA is such that the gravimeter is located on the same stabilised platform as the gradiometer, meaning that the measurements are all on the same axis.

With its increased capability and performance, the eFTG offers benefits throughout the entire geological section, helping to deliver a more accurate Earth model. The instrument's greater sensitivity allows the detection of smaller geological features with subtler density contrasts and improves structural mapping in deeper basins. Surveys can be more cost-effective, since the increased S/N means that line spacing can be widened in some geological scenarios. The higher bandwidth and spatial resolution of the data mean they can be integrated more tightly with seismic data than before; applications include joint gravity-seismic inversion and the quality control and refinement of seismic velocities. eFTG data can also be acquired ahead of a seismic survey, providing a highly detailed map on which to position seismic lines optimally.

The EGG was an evolution of technology originally developed for the European Space Agency and was later developed further by ARKeX (a company that is now defunct). It used two key principles of superconductivity to deliver its performance: the Meissner effect, which levitates the EGG proof masses, and flux quantization, which gives the EGG its inherent stability. The EGG was specifically designed for highly dynamic survey environments but was never deployed in commercial operations.

This is the gravity gradiometer deployed on the European Space Agency’s Gravity Field and Steady-State Ocean Circulation Explorer (GOCE) mission. It is a three-axis diagonal gradiometer based on three pairs of electrostatic servo-controlled accelerometers.

The ETC (Earth Tide Correction) is a time-varying correction applied to gravity data to compensate for Earth tides. The ETC is included as part of the Drift Correction and is also referred to as the Tidal Correction.

Earth tides are the time-varying response of the solid Earth's surface to the tidal influences of the Moon and Sun. Earth tides can produce displacements of up to about 10 cm and gravity anomalies of about 0.2–0.3 mGal. Their magnitude depends on latitude and time.

Edge Effects are distortions of data that appear at the edges of grid images after applying filter operators or other grid or line transformations. Applying a Taper, Data Extension or Edge Smoothing Filters allows Edge Effects to be minimised.
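A common approach, assumed here for illustration, is to mirror-extend the data and taper the extension smoothly to the mean before filtering, then crop back to the original extent. The helper below is hypothetical and works on a single profile; grids would be treated the same way along both axes.

```python
import numpy as np

def extend_and_taper(profile, n_pad):
    """Mirror-extend a profile by n_pad samples at each end, then taper the
    extensions smoothly to the profile mean with a half-cosine ramp.
    Filter the result, then crop it back with result[n_pad:-n_pad].
    """
    values = np.asarray(profile, dtype=float)
    if not 0 < n_pad < values.size:
        raise ValueError("n_pad must be between 1 and len(profile) - 1")
    extended = np.pad(values, n_pad, mode="reflect")
    ramp = 0.5 * (1.0 - np.cos(np.pi * np.arange(n_pad) / n_pad))  # rises from 0 towards 1
    weights = np.ones(extended.size)
    weights[:n_pad] = ramp
    weights[-n_pad:] = ramp[::-1]
    mean = values.mean()
    return mean + (extended - mean) * weights

# A linear trend is a worst case for FFT filtering: its two ends do not match
trend = np.linspace(0.0, 100.0, 50)
padded = extend_and_taper(trend, n_pad=20)
```

Because both ends of `padded` now sit at the same value, the periodic wrap-around implied by an FFT no longer introduces a sharp step at the data edges.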

A pre-survey study to assess the suitability of geophysical techniques for achieving the survey objectives. Different platforms, instruments, terrain, line spacings, etc. can be considered. Earthline's feasibility studies usually take the form of one or more 2D sections developed into a full 3D earth model, allowing a realistic simulated survey response to be calculated.

The term “Figure of Merit” (FOM) is used in various fields to quantify the performance, quality, or effectiveness of a particular system, process, or measurement. It is a numerical value or metric that provides an objective way to compare different alternatives, evaluate the efficiency of a system, or assess the success of an operation.

In geophysical exploration, FOM is employed to assess the success of surveys or measurements. For instance, in geophysical data processing, the FOM may be used to evaluate the signal-to-noise ratio or the resolution of the data.

In geophysics, the Free-air Gravity Anomaly, often simply called the Free-air Anomaly, is the measured gravity anomaly after a free-air correction is applied to account for the elevation at which a measurement is made. It does so by adjusting the measurements of gravity to what would have been measured at a reference level, which is commonly taken as mean sea level or the Geoid.

In the case of airborne gravity and magnetic surveying, a line plan is a survey design used to minimise terrain clearance throughout the survey area of interest (AOI). In gravity surveying in particular, it is important to acquire data as close to the ground as possible after taking into account all safety considerations. Typically, surveys are planned for a constant flight height of 120 m, but the actual terrain clearance in hilly or mountainous areas will be higher. To achieve the optimal line plan in areas with topography, a 3D Drape Analysis is undertaken, which allows a more flexible acquisition footprint and achieves the best possible terrain clearance within the constraints of the topography. The main survey transect lines are known as Cross Lines; Tie Lines are also planned and are primarily used to level the magnetic data and the long-wavelength gravity data from the AGMA.

Forward modelling in 2D is a widely used and critical method for integrating potential field and seismic data. It is a convenient way to identify the sources of anomalies where constraints exist, and it allows any number of simple model scenarios to be tested.

In physics, the Fourier transform (FT) is a transform that converts a function into a form that describes the frequencies present in the original function. The output of the transform is a complex-valued function of frequency. The term Fourier transform refers to both this complex-valued function and the mathematical operation. When a distinction needs to be made, the Fourier transform is sometimes called the frequency domain representation of the original function.

The Fourier analysis of gravity data is a geophysical data processing technique used to analyse and interpret gravity data obtained from gravity surveys. Fourier analysis is a mathematical method that decomposes complex functions or data sets into a series of simple sinusoidal functions, known as sine and cosine functions. In the context of gravity data, Fourier processing involves transforming the gravity measurements from the spatial domain (latitude, longitude, and elevation) to the frequency domain using Fourier transforms. Fourier analysis is particularly useful for detecting anomalies associated with geological features, such as faults, salt domes, or mineral deposits, which exhibit distinct spatial frequency patterns in the gravity field.
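As a small illustration of moving to the frequency (wavenumber) domain, the sketch below computes the amplitude spectrum of a synthetic gravity profile and picks out its dominant wavelengths. It assumes an evenly sampled 1D profile in metres rather than data in latitude and longitude; the function name is hypothetical.

```python
import numpy as np

def amplitude_spectrum(profile, spacing):
    """One-sided amplitude spectrum of an evenly sampled profile.

    Returns (wavelength, amplitude), skipping the zero-wavenumber (mean) term.
    """
    values = np.asarray(profile, dtype=float)
    amplitude = np.abs(np.fft.rfft(values - values.mean())) * 2 / values.size
    k = np.fft.rfftfreq(values.size, d=spacing)     # cycles per unit length
    return 1.0 / k[1:], amplitude[1:]

# Synthetic gravity profile (mGal): a broad 2.5 km feature plus a 400 m feature
x = np.arange(1000) * 10.0                          # 10 km sampled every 10 m
profile = 5.0 * np.sin(2 * np.pi * x / 2500) + 1.0 * np.sin(2 * np.pi * x / 400)

wavelength, amp = amplitude_spectrum(profile, spacing=10.0)
strongest = wavelength[np.argsort(amp)[::-1][:2]]   # the two dominant wavelengths
```

The spectrum isolates the two features as separate peaks (2500 m with amplitude 5 mGal and 400 m with amplitude 1 mGal), which is the separation that wavelength filtering then exploits.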

To ensure the accuracy and reliability of the data collected during airborne geophysical surveys, flight calibrations are performed before and after each survey flight. Flight calibrations involve various procedures to establish baseline measurements, account for instrument drift, and validate the data quality.

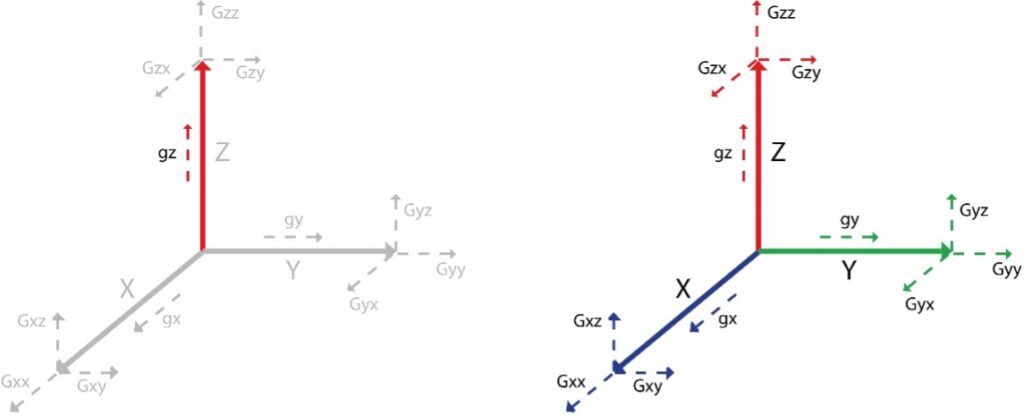

Full Tensor Gradiometers measure the rate of change of the gravity vector in all three perpendicular directions giving rise to a Gravity Gradient Tensor.

Conventional gravity measures one component of the gravity field, in the vertical direction (Gz) (left); full tensor gravity gradiometry measures all components of the gravity field (right).

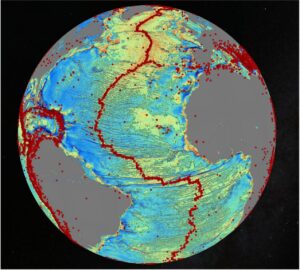

Gravity gradiometry is a well-established geophysical technique that is often used in the search for natural resources and potential sites for CCS. The technology measures small differences in the Earth’s gravity field associated with changes in subsurface geology.

Lockheed Martin is the only company to provide commercial moving-base gravity gradiometers and, until 2020, broadly offered two types of gravity gradiometer to the exploration industry: the full-tensor gravity gradiometer (FTG) system, which is deployed in both airborne and marine modes, and the partial tensor system, typically deployed in airborne mode only. By their intrinsic design, these systems have greatly improved resolution compared with conventional (scalar) gravimeters and thus provide a natural advantage in exploration. In 2020, the Enhanced Full Tensor Gravity Gradiometer (eFTG), the 4th-generation and most advanced system, was developed. This system is used exclusively by Earthline Consulting.

In addition, geoscientists can benefit from having multidirectional gravity gradients as they yield extra information on geometrical and density changes in subsurface geology that give rise to gravity anomalies. Historically, these gravity gradiometers have been widely used in a variety of geological settings to rapidly screen petroleum basins and assist with the mapping of basin architecture, basement depth, faults and intra-sedimentary structure. They can also be used in mineral exploration and the search for renewables such as sites for geothermal energy development.

The fluxgate magnetometer was invented by H. Aschenbrenner and G. Goubau in 1936. A team at Gulf Research Laboratories led by Victor Vacquier developed airborne fluxgate magnetometers to detect submarines during World War II and after the war confirmed the theory of plate tectonics by using them to measure shifts in the magnetic patterns on the sea floor.

A fluxgate magnetometer consists of a small magnetically susceptible core wrapped by two coils of wire. An alternating electric current is passed through one coil, driving the core through an alternating cycle of magnetic saturation.

This constantly changing field induces a voltage in the second coil, which is measured by a detector. In a magnetically neutral background, the input and output signals match. However, when the core is exposed to a background field, it is more easily saturated in alignment with that field and less easily saturated in opposition to it. Hence the alternating magnetic field and the induced output voltage are out of step with the input current. The extent to which this is the case depends on the strength of the background magnetic field. Often, the signal in the output coil is integrated, yielding an output analogue voltage proportional to the magnetic field.
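In many fluxgate designs this asymmetry is detected as a second harmonic of the drive frequency in the sense coil. The toy simulation below illustrates the effect under loudly stated assumptions: the core's magnetisation is modelled as `tanh` of the applied field (a hypothetical, idealised saturation curve), the sense voltage is taken as proportional to the rate of change of magnetisation, and `second_harmonic` is an illustrative helper, not an instrument algorithm. With no background field the signal contains only odd harmonics; a background field introduces a second harmonic that grows with the field.

```python
import numpy as np

def second_harmonic(h_ext, h_drive=5.0, n=4096, cycles=8):
    """Second-harmonic amplitude of the sense-coil signal of an idealised fluxgate.

    h_ext   : background field along the core axis (arbitrary units; saturation ~ 1)
    h_drive : peak drive field, large enough to saturate the core each half-cycle
    """
    dt = cycles / n                                   # sample interval, in drive periods
    t = np.arange(n) * dt                             # whole number of drive periods
    h = h_drive * np.sin(2 * np.pi * t) + h_ext       # drive field plus background field
    m = np.tanh(h)                                    # toy saturating core response (assumption)
    v = (np.roll(m, -1) - np.roll(m, 1)) / (2 * dt)   # sense voltage ~ dM/dt, periodic difference
    spectrum = np.abs(np.fft.rfft(v)) / n
    return spectrum[2 * cycles]                       # FFT bin of the 2nd harmonic

for field in (0.0, 0.1, 0.2):
    print(field, second_harmonic(field))
```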

A wide variety of sensors are currently available and used to measure magnetic fields. Fluxgate compasses and gradiometers measure the direction and magnitude of magnetic fields. Fluxgates are affordable, rugged and compact, with miniaturisation recently advancing to the point of complete sensor solutions in the form of IC chips. This, plus their typically low power consumption, makes them ideal for a variety of sensing applications. Fluxgate Gradiometers are commonly used for archaeological prospecting and unexploded ordnance (UXO) detection.

A free water gravity anomaly is obtained with the use of the Towed Deep Ocean Gravimeter and corrected for the free-water gradient, latitude effect, vertical acceleration, Eotvos effect and solid-earth tides.
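The Eötvös correction in that list compensates for the extra centrifugal and Coriolis acceleration of a platform moving over a rotating Earth. A minimal sketch, assuming a spherical Earth and the commonly quoted form 2ΩV cos φ sin α + V²/R (speed V, latitude φ, heading α measured clockwise from north), is below; the constants and the function name `eotvos_correction_mgal` are illustrative, and an operational processing chain may use a more complete formulation.

```python
import math

OMEGA = 7.292115e-5    # Earth's rotation rate, rad/s
R_EARTH = 6.371e6      # mean Earth radius, m (spherical approximation)

def eotvos_correction_mgal(speed_ms, latitude_deg, heading_deg):
    """Eötvös correction (mGal) for a platform moving over a rotating Earth.

    Eastward motion gives a positive correction, which is added to observed gravity.
    """
    phi = math.radians(latitude_deg)
    alpha = math.radians(heading_deg)
    accel = (2.0 * OMEGA * speed_ms * math.cos(phi) * math.sin(alpha)
             + speed_ms ** 2 / R_EARTH)               # m/s^2
    return accel * 1e5                                # 1 m/s^2 = 1e5 mGal
```

With V expressed in knots, the same expression reduces to the familiar 7.503 V cos φ sin α + 0.004154 V² mGal.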

A geographic information system (GIS) consists of integrated computer hardware and software that store, manage, analyse, edit, output, and visualise geographical data (usually geospatial in nature: GPS, remote sensing, etc.). The core of any GIS is a spatial database that contains representations of geographical phenomena, modelling their geometry (location and shape) and their properties or attributes. A GIS database may be stored in a variety of forms, such as a collection of separate data files or a single spatially enabled relational database.

Geophysics is a branch of natural science concerned with the physical processes and physical properties of the Earth and the use of quantitative methods for its analysis. Geophysicists, who usually study geophysics, physics, or one of the earth sciences at the graduate level, complete investigations across a wide range of scientific disciplines. The term geophysics classically refers to solid earth applications: Earth’s shape; its gravitational, magnetic and electromagnetic fields; its internal structure and composition.

Gravity forward modelling (GFM) is the computation of the gravity field of a given mass distribution. It is used in geophysics to predict the gravitational field produced by subsurface structures or distributions of mass, helping to understand subsurface composition and geological features by simulating how gravity responds to different subsurface arrangements.
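A minimal forward-modelling sketch, assuming the simplest possible body, a buried sphere (whose external field equals that of a point mass at its centre), computes the vertical gravity anomaly along a surface profile. The function name `sphere_gz_mgal` and the example depth, radius and density contrast are hypothetical illustration values.

```python
import numpy as np

G = 6.674e-11   # gravitational constant, m^3 kg^-1 s^-2

def sphere_gz_mgal(x, depth, radius, delta_rho):
    """Vertical gravity anomaly (mGal) of a buried sphere along a surface profile.

    x         : horizontal distances from the point above the sphere centre (m)
    depth     : depth to the sphere centre (m)
    radius    : sphere radius (m)
    delta_rho : density contrast with the host rock (kg/m^3)
    """
    mass = 4.0 / 3.0 * np.pi * radius ** 3 * delta_rho     # excess mass, kg
    x = np.asarray(x, dtype=float)
    gz = G * mass * depth / (x ** 2 + depth ** 2) ** 1.5    # m/s^2, positive downward
    return gz * 1e5                                         # convert to mGal

x = np.linspace(-1000.0, 1000.0, 201)
profile = sphere_gz_mgal(x, depth=200.0, radius=100.0, delta_rho=300.0)
```

More realistic models replace the sphere with prisms or polygons, but the principle is the same: sum the attraction of every mass element at each observation point.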

The unit of gravity gradient is the eotvos (abbreviated as E), which is equivalent to 10⁻⁹ s⁻² (or 10⁻⁴ mGal/m). A person walking past at a distance of 2 metres would provide a gravity gradient signal of approximately one E. Large physical bodies such as mountains can give signals of several hundred eotvos.
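The unit conversion, and the order of magnitude quoted for a passer-by, can be checked with a short calculation. This sketch assumes the person can be treated as a 70 kg point mass (a rough, hypothetical simplification) and evaluates the gradient component along the line joining mass and sensor, 2GM/r³; the function names are illustrative.

```python
G = 6.674e-11    # gravitational constant, m^3 kg^-1 s^-2
EOTVOS = 1e-9    # 1 E = 1e-9 s^-2

def eotvos_to_mgal_per_m(value_e):
    """Convert a gradient in E to mGal/m (1 mGal = 1e-5 m/s^2)."""
    return value_e * EOTVOS / 1e-5

def point_mass_axial_gradient_eotvos(mass_kg, distance_m):
    """Gradient 2GM/r^3 of a point mass along the line joining it to the sensor, in E."""
    return 2.0 * G * mass_kg / distance_m ** 3 / EOTVOS

print(round(point_mass_axial_gradient_eotvos(70.0, 2.0), 2))   # about 1.17 E
print(eotvos_to_mgal_per_m(1.0))                               # 1 E expressed in mGal/m
```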

An instrument used to measure the acceleration due to gravity, or, more specifically, variations in the gravitational field between two or more points. The change from calling a device an “accelerometer” to calling it a “gravimeter” occurs at approximately the point where it has to make corrections for earth tides.

Gravity measurements reflect the Earth’s gravitational attraction, the centrifugal acceleration due to its rotation, tidal accelerations due to the sun, moon and planets, and other applied forces.

Horizontal and vertical gradients, and edge-detection filters based on them such as the analytic signal, tilt angle and theta map, play an important role in the interpretation and analysis of gravity field data. Normalised derivative methods are used to equalise signals from sources buried at different depths.
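For a single profile, the horizontal derivative can be taken by finite differences and the vertical derivative in the wavenumber domain by multiplying the spectrum by |k|, which is exact only for 2-D (strike-infinite) sources and assumes z positive downwards. The sketch below combines them into the tilt angle, arctan(∂g/∂z / |∂g/∂x|), and the analytic signal amplitude, √((∂g/∂x)² + (∂g/∂z)²). Function names are illustrative, and edge effects from the FFT's implied periodicity are ignored.

```python
import numpy as np

def profile_derivatives(g, dx):
    """Horizontal and vertical (z down) derivatives of a regularly sampled profile."""
    dgdx = np.gradient(g, dx)                               # central differences
    k = 2 * np.pi * np.fft.rfftfreq(len(g), d=dx)           # angular wavenumber, rad/m
    dgdz = np.fft.irfft(k * np.fft.rfft(g), n=len(g))       # |k| filter = vertical derivative
    return dgdx, dgdz

def tilt_angle(g, dx):
    """Tilt angle in radians, between -pi/2 and pi/2; positive over the source."""
    dgdx, dgdz = profile_derivatives(g, dx)
    return np.arctan2(dgdz, np.abs(dgdx))

def analytic_signal_amplitude(g, dx):
    dgdx, dgdz = profile_derivatives(g, dx)
    return np.hypot(dgdx, dgdz)
```

The tilt angle is bounded, so weak and strong anomalies appear with similar amplitude, and its zero crossing tends to track source edges.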

Gravity Gradiometry is the study and measurement of the spatial rate of change (gradient) of the Earth’s gravity field, which highlights variations (anomalies) in that field.

Gravity gradiometers are instruments which measure the spatial derivatives of the gravity vector. The most frequently used and intuitive component is the vertical gravity gradient, Gzz, which represents the rate of change of vertical gravity (gz) with height (z). It can be deduced by differencing the value of gravity at two points separated by a small vertical distance, l, and dividing by this distance. The two gravity measurements are provided by accelerometers which are matched and aligned to an extremely high level of accuracy. Earthline Consulting uses the latest generation Lockheed Martin Gravity Gradiometers.
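The differencing described above can be sketched with a point mass standing in for the geology (an illustrative assumption) and two ideal accelerometers a short vertical baseline apart. For a mass a distance d below the sensor pair, the finite difference converges to the analytic vertical gradient 2GM/d³ as the baseline shrinks; the function names are hypothetical.

```python
G = 6.674e-11   # gravitational constant, m^3 kg^-1 s^-2

def gz_point_mass(mass_kg, depth_below_m):
    """Vertical attraction (m/s^2, positive down) of a point mass beneath the sensor."""
    return G * mass_kg / depth_below_m ** 2

def gzz_by_differencing(mass_kg, depth_below_m, baseline_m):
    """Approximate Gzz (s^-2, z positive down) from two sensors baseline_m apart vertically."""
    lower = gz_point_mass(mass_kg, depth_below_m - baseline_m / 2)   # sensor nearer the mass
    upper = gz_point_mass(mass_kg, depth_below_m + baseline_m / 2)
    return (lower - upper) / baseline_m

print(gzz_by_differencing(1e9, 100.0, 0.2) / 1e-9, "E")
```

Because the two readings are nearly equal, the difference is tiny, which is why the accelerometers must be matched and aligned so precisely.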

The Gravity Gradient Tensor is the spatial rate of change of gravitational acceleration. Because acceleration is a vector quantity, with magnitude and three-dimensional direction, its full spatial gradient is a 3×3 tensor.
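As a worked example, the tensor of a point mass follows from the second derivatives of its potential GM/r. The sketch below (names illustrative; r is the vector from the mass to the observation point) shows two properties that hold for any tensor measured outside the sources: it is symmetric, and its trace is zero by Laplace's equation, so only five of the nine components are independent.

```python
import numpy as np

G = 6.674e-11   # gravitational constant, m^3 kg^-1 s^-2

def point_mass_tensor(mass_kg, source_xyz, obs_xyz):
    """Gravity gradient tensor (in E) of a point mass.

    T_ij = G*M*(3*r_i*r_j - |r|^2 * delta_ij) / |r|^5, the second derivatives of G*M/|r|.
    """
    r = np.asarray(obs_xyz, float) - np.asarray(source_xyz, float)
    r2 = r @ r
    tensor = G * mass_kg * (3.0 * np.outer(r, r) - r2 * np.eye(3)) / r2 ** 2.5
    return tensor / 1e-9                       # s^-2 -> E

T = point_mass_tensor(1e9, source_xyz=(0, 0, 0), obs_xyz=(50.0, 20.0, 100.0))
print(np.round(T, 2))
```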

Three-dimensional gravity inversion is an effective way to extract subsurface density distribution from gravity data. Machine-learning-based inversion is a newer data-driven method for mapping the observed data to a 3D model.

The Global Positioning System (GPS) is a satellite-based radio navigation system owned by the United States government and operated by the United States Space Force. It is one of the Global Navigation Satellite Systems (GNSS) and provides geolocation and time information to a GPS receiver anywhere on or near the Earth where there is an unobstructed line of sight to four or more GPS satellites.

It does not require the user to transmit any data, and operates independently of any telephonic or Internet reception, though these technologies can enhance the usefulness of the GPS positioning information. It provides critical positioning capabilities to military, civil, and commercial users around the world. Although the United States government created, controls and maintains the GPS system, it is freely accessible to anyone with a GPS receiver.

Ground-penetrating radar (GPR) is a geophysical method that uses radar pulses to image the subsurface. It is a non-intrusive method of surveying the subsurface to investigate a variety of media, including rock, soil, ice, fresh water, and man-made structures. In the right conditions GPR can be used to detect subsurface objects, changes in material properties, and voids and cracks.

Individual lines of GPR data represent a sectional (profile) view of the subsurface. Multiple lines of data systematically collected over an area may be used to construct three-dimensional or tomographic images. Data may be presented as three-dimensional blocks, or as horizontal or vertical slices. Horizontal slices (known as “depth slices” or “time slices”) are essentially plan-view maps isolating specific depths.
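Converting a time slice to a depth slice requires a radar velocity. A minimal sketch, assuming a uniform, low-loss, non-magnetic medium where velocity ≈ c/√εr (εr being the relative permittivity), halves the two-way travel time to get depth; the function names and the example permittivity are illustrative.

```python
C_M_PER_NS = 0.299792458     # speed of light in vacuum, m/ns

def radar_velocity(rel_permittivity):
    """Approximate radar wave velocity (m/ns) in a low-loss, non-magnetic medium."""
    return C_M_PER_NS / rel_permittivity ** 0.5

def twt_to_depth(twt_ns, rel_permittivity):
    """Convert two-way travel time (ns) to depth (m): the pulse travels down and back."""
    return radar_velocity(rel_permittivity) * twt_ns / 2.0
```

For example, with εr = 9 (a hypothetical value) the velocity is about 0.1 m/ns, so a 20 ns time slice lies at roughly 1 m depth.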

GOCE (Gravity field and steady-state Ocean Circulation Explorer) was the first of the European Space Agency’s (ESA’s) Living Planet Programme heavy satellites intended to map the Earth’s gravity field in unprecedented detail. The spacecraft’s primary instrument, known as the Gravitec gravity gradiometer, was a highly sensitive gradiometer consisting of three pairs of accelerometers that measured gravitational gradients along three orthogonal axes.

Launched in 2009, GOCE mapped the deep structure of the Earth’s mantle and probed hazardous volcanic regions. By combining the gravity data with information about sea surface height gathered by other satellite altimeters, scientists were able to track the direction and speed of geostrophic ocean currents. The low orbit and high accuracy of the system greatly improved the known accuracy and spatial resolution of the geoid (the theoretical surface of equal gravitational potential on the Earth). The satellite began dropping out of orbit and made an uncontrolled re-entry on 11 November 2013.

A gyroscopic or inertial platform is a system using gyroscopes to maintain a platform in a fixed orientation in space despite the movement of the vehicle that it is attached to. These can then be used to stabilise sensitive instruments. See Stabilised or Inertial Platform for context in FTG surveying.

Geothermal energy is thermal energy extracted from the Earth’s crust. It combines energy from the formation of the planet and from radioactive decay. Geothermal energy has been exploited as a source of heat and/or electric power for millennia.

Geothermal heating, using water from hot springs for example, has been used since Roman times. Geothermal power (generation of electricity from geothermal energy) has been used since the 20th century. Unlike wind and solar energy, geothermal plants produce power at a constant rate, without regard to weather conditions. Geothermal resources are theoretically more than adequate to supply humanity’s energy needs. Most extraction occurs near tectonic plate boundaries, often in areas associated with volcanism.

The cost of generating geothermal power decreased by ~25% during the 1980s and 1990s. Technological advances have continued to reduce costs and thereby expand the amount of viable resources. As of 2010, geothermal electricity was being generated in over 26 countries.

Earthline has flown eFTG surveys for both governments and energy companies to help identify areas suitable for geothermal energy development.

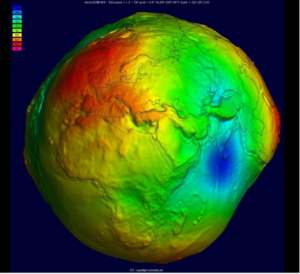

The Geoid is the shape that the ocean surface would take under the influence of the gravity of Earth, including gravitational attraction and Earth’s rotation, if other influences such as winds and tides were absent. This surface is extended through the continents (such as with very narrow hypothetical canals) and can be defined on land as the surface which the ocean water would assume if it could reach its own level everywhere.

Gauss described it as the “mathematical figure of the Earth”, a smooth but irregular surface whose shape results from the uneven distribution of mass within and on the surface of Earth. It can be known only through extensive gravitational measurements and calculations. Despite being an important concept for almost 200 years in the history of geodesy and geophysics, it has been defined to high precision only since advances in satellite geodesy in the late 20th century.

The Geoid surface is irregular, unlike the Reference Ellipsoid (a mathematically idealised representation of the physical Earth as an ellipsoid), but is considerably smoother than Earth’s physical surface. Although the “ground” of the Earth has excursions on the order of +8,800 m (Mount Everest) and −11,000 m (Mariana Trench), the geoid’s deviations from an ellipsoid range from +85 m (Iceland) to −106 m (southern India), less than 200 m in total.

If the ocean were of constant density and undisturbed by tides, currents or weather, its surface would resemble the geoid. The permanent deviation between the geoid and mean sea level is called Ocean Surface Topography.

An excellent source of existing global gravity field models and their visualisation is provided by the ICGEM (https://icgem.gfz-potsdam.de/home) which is one of five services coordinated by the International Gravity Field Service (IGFS) of the International Association of Geodesy (IAG).

Hyperspectral imaging collects and processes information from across the electromagnetic spectrum. The goal of hyperspectral imaging is to obtain the spectrum for each pixel in the image of a scene, with the purpose of finding objects, identifying materials, or detecting processes.

Hyperspectral sensors look at objects using a vast portion of the electromagnetic spectrum. Certain objects leave unique ‘fingerprints’ in the electromagnetic spectrum. Known as spectral signatures, these ‘fingerprints’ enable identification of the materials that make up a scanned object. For example, a spectral signature for oil helps geologists find new oil accumulations.

Magnetic surveys can suffer from noise coming from a range of sources. Different magnetometer technologies suffer different kinds of noise problems. Heading errors are one group of noise. They can come from three sources:

Some total field sensors give different readings depending on their orientation. Magnetic materials in the sensor itself are the primary cause of this error. In some magnetometers, such as the Vapor Magnetometers (caesium, potassium, etc.), there are sources of heading error in the physics that contribute small amounts to the total heading error.

Console noise comes from magnetic components on or within the console. These include ferrite cores in inductors and transformers, steel frames around LCDs, legs on IC chips and steel cases in disposable batteries. Some popular MIL-spec (military specification) connectors also have steel springs. Operators must take care to be ‘magnetically clean’.

The magnetic response (noise) from ferrous objects on the operator and console can change with heading direction because of induction and remanence. Aeromagnetic survey aircraft and quad bike systems can use special compensators to correct for heading error noise.

Heading errors usually look like ‘herringbone patterns’ in survey images. Alternate lines can also be ‘corrugated’.

The half width method is a graphical method of estimating the source depth of an isolated gravity or magnetic anomaly.
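A minimal sketch of the half width method applied numerically rather than graphically: measure the half-width of the anomaly at half its peak value and scale it by a shape factor. The factors used here follow from the ideal anomaly formulas, depth ≈ 1.305 × half-width for a sphere (point mass) and depth ≈ half-width for a horizontal cylinder. The function names are illustrative, and the profile is assumed to be single-peaked, positive and free of regional trend.

```python
import numpy as np

# Depth-to-centre / half-width factors for simple source shapes (gravity, vertical component)
HALF_WIDTH_FACTOR = {"sphere": 1.305, "horizontal_cylinder": 1.0}

def half_width(x, g):
    """Half the distance between the two points where the anomaly falls to half its peak."""
    x = np.asarray(x, float)
    g = np.asarray(g, float)
    i_peak = int(np.argmax(g))
    half = g[i_peak] / 2.0
    # interpolate the half-maximum crossing on each flank (flanks assumed monotonic)
    left = np.interp(half, g[: i_peak + 1], x[: i_peak + 1])
    right = np.interp(half, g[i_peak:][::-1], x[i_peak:][::-1])
    return (right - left) / 2.0

def depth_from_half_width(x, g, shape="sphere"):
    return HALF_WIDTH_FACTOR[shape] * half_width(x, g)
```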

The Halo Effect is an outer short-wavelength curvilinear edge of the opposite sign adjacent to the filtered anomaly. It represents the artificial opposite field curvature around the periphery of that anomaly due to convolution with a filter operator. Halo Effects usually appear on grid data images after applying high-resolution band-pass or derivative filtering to the observed potential field data. Halo Effects are a side effect of increased lateral resolution, which at the same time makes enhanced residual anomalies more visible and hence easier to correlate.

The Hannel Method is a graphical method used for estimating the source depth of an isolated magnetic anomaly. For the magnetic pole model, depth = half the horizontal distance between the points at which the anomaly falls to 1/3 of its maximum amplitude.
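A sketch of the rule, applied to a sampled profile by interpolating the two points where the anomaly falls to one third of its peak. For the ideal pole anomaly shape z/(x² + z²)^(3/2), the one-third points lie at x = ±z·√(3^(2/3) − 1) ≈ ±1.04z, so the rule slightly overestimates depth (by about 4%) for that model. The function name and test profile are illustrative assumptions.

```python
import numpy as np

def hannel_depth(x, t):
    """Depth estimate: half the width of a single-peaked anomaly at 1/3 of its maximum."""
    x = np.asarray(x, float)
    t = np.asarray(t, float)
    i_peak = int(np.argmax(t))
    level = t[i_peak] / 3.0
    # interpolate the one-third crossing on each flank (flanks assumed monotonic)
    left = np.interp(level, t[: i_peak + 1], x[: i_peak + 1])
    right = np.interp(level, t[i_peak:][::-1], x[i_peak:][::-1])
    return (right - left) / 2.0
```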

The Hilbert Transform Filter (HTF) is a space-domain convolution filter which is applied to line datasets, using a finite difference operator, to obtain the imaginary (quadrature) component of the observed potential field data. For better results, detrending is recommended before applying the HTF. The filter result is combined with the real component of the data to calculate instantaneous phase and instantaneous frequency.
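The sketch below computes the same quantities with an FFT-based Hilbert transform instead of the space-domain finite-difference operator described above (a swapped-in technique; `scipy.signal.hilbert` uses the same spectral approach). The real part of the complex analytic signal is the data and the imaginary part is its Hilbert transform; instantaneous phase is its angle, and instantaneous frequency is the rate of change of phase with distance. Function names are illustrative, and the profile is assumed detrended and regularly sampled.

```python
import numpy as np

def analytic_profile(f):
    """Complex analytic signal f + i*H[f] of a real 1-D profile, via the FFT."""
    n = len(f)
    spectrum = np.fft.fft(f)
    weights = np.zeros(n)
    weights[0] = 1.0                      # keep the mean
    if n % 2 == 0:
        weights[n // 2] = 1.0             # keep the Nyquist bin
        weights[1 : n // 2] = 2.0         # double positive frequencies
    else:
        weights[1 : (n + 1) // 2] = 2.0
    return np.fft.ifft(spectrum * weights)    # negative frequencies removed

def instantaneous_phase_and_frequency(f, dx):
    z = analytic_profile(f)
    phase = np.unwrap(np.angle(z))                  # radians
    frequency = np.diff(phase) / (2 * np.pi * dx)   # cycles per unit distance
    return phase, frequency
```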

The Heading is the direction of the survey aircraft or ship, as indicated by compass readings.

The Heading Error Test is one of the on-site magnetometer calibrations made by magnetic field test measurements along at least four flight lines, oriented in the direction of survey lines and flown above the area of low gradient of the Earth’s magnetic field. The heading variations are determined from comparison of readings obtained during the flight along these four lines.

The stabilized platforms used in marine and airborne gravimeters behave like damped long‐period pendulums. When subjected to horizontal accelerations they tilt, resulting in gravimeter reading errors.

The amount of tilt depends on the ratio of the period of the horizontal motion to that of the platform and is negligible if this ratio is less than about 0.1 (LaCoste, 1967). The Horizontal Acceleration Correction is applied to airborne gravity data to compensate for the stabilised platform tilt and corresponding errors caused by the aircraft’s horizontal accelerations.

Horizontal Geomagnetic Intensity is the magnitude of the horizontal component of the Geomagnetic Field Vector at the point of measurement. The intensity of the field is often measured in gauss (G) but is generally reported in microteslas (1 G = 100 µT).

A Hamming Filter is an edge smoothing spectral domain grid filter that modifies grid surface values to ensure their smooth transition to zero at the edges of a grid.

An early form (1932) of stable gravimeter consisting of a weight suspended from a spiral spring, a hinged lever, and a compensating spring for restoring the system to a null position.

The Hayford modification was named after John Fillmore Hayford, an American geodesist who lived from 1868 to 1925. His work significantly contributed to the field of geodesy.

The Hayford modification is an isostatic hypothesis that builds upon the Pratt Hypothesis. In this modified model, the pressure is balanced at a specific depth known as the “depth of compensation”. This is a modification of the concept of gravitational (isostatic) equilibrium between crust and mantle. It assumes that the gravitational load of accumulated sediments is balanced in all sedimentary basins at a certain depth of compensation: the heavier the sedimentary load, the larger the depth of compensation.

A Hysteresis Loop, also known as a Hysteresis Curve, is a four-quadrant graph that illustrates the relationship between the induced magnetic flux density (denoted as B) and the magnetizing force (denoted as H). This loop provides valuable insights into the magnetic properties and behaviours of a material, including its retentivity, coercive force and residual magnetism.

A Hartley Transform is a mathematical operation which is used to convert the observed line data from their original space domain to the equivalent spectral domain.
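For real-valued data the discrete Hartley transform can be computed from an FFT, since its kernel is cas(θ) = cos θ + sin θ; with NumPy's sign convention this gives H = Re(F) − Im(F). In the minimal sketch below (function name illustrative), applying the transform twice returns the input scaled by the number of samples, so the same routine also serves as its own inverse.

```python
import numpy as np

def hartley(x):
    """Discrete Hartley transform H_k = sum_n x_n [cos(2*pi*k*n/N) + sin(2*pi*k*n/N)]."""
    f = np.fft.fft(np.asarray(x, float))
    return f.real - f.imag
```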

The main metric for estimating FTG data noise is the normalised inline sum:

$$\mathrm{nILS} = \frac{I_1 + I_2 + I_3}{\sqrt{3}}$$

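In this summation, the tensor signals that make up the inline components of differential curvature (I1, I2, I3) cancel, leaving a quantity that reflects the overall noise of the eFTG across its 3 Gravity Gradient Instruments (GGIs). Assuming independent noise of similar size in each channel, the noise adds in quadrature, so the sum carries √3 times the noise of a single channel; the normalisation factor of 1/√3 therefore gives the noise per eFTG channel.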
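A minimal numerical check of that reasoning, assuming three inline channels whose signals sum exactly to zero (a simplification standing in for the real instrument geometry) plus independent noise of equal standard deviation σ on each: the sum cancels the signal, its noise grows to σ√3, and dividing by √3 recovers σ per channel. The noise level, signals and function name are hypothetical.

```python
import numpy as np

def normalised_inline_sum(i1, i2, i3):
    """nILS = (I1 + I2 + I3) / sqrt(3) for the three GGI inline channels."""
    return (np.asarray(i1) + np.asarray(i2) + np.asarray(i3)) / np.sqrt(3.0)

rng = np.random.default_rng(0)
n, sigma = 100_000, 3.0                          # samples and per-channel noise (E), hypothetical
s = rng.normal(0.0, 50.0, size=n)                # stand-in geological signal
signals = (s, -0.5 * s, -0.5 * s)                # sums to zero by construction (assumption)
channels = [sig + rng.normal(0.0, sigma, size=n) for sig in signals]
nils = normalised_inline_sum(*channels)
print(nils.std())                                # close to sigma
```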

The International Association of Oil & Gas Producers (IOGP) is the petroleum industry’s global forum in which members identify and share best practices to achieve improvements in health, safety, the environment, security, social responsibility, engineering and operations.

The International Airborne Geophysics Safety Association (IAGSA) promotes the safe operation of helicopters and fixed-wing aircraft on airborne geophysical surveys. Member companies conduct low-level survey flights and are committed to safe aircraft operations. The association develops recommended practices and serves as a centre for the exchange of safety information and as a repository for operational statistics.

International Traffic in Arms Regulations (ITAR) is a United States regulatory regime to restrict and control the export of defence and military related technologies to safeguard U.S. national security. In practice, certain technologies, including the LHM gradiometers, are regulated under the ITAR rules because of their military applications and therefore cannot be used to survey in certain countries. The Export Administration Regulations (EAR) are a related set of United States export guidelines and prohibitions administered by the Bureau of Industry and Security.

Intersection analysis is a spatial analysis technique used in geographic information systems (GIS) and other fields to examine the relationships between different spatial datasets and identify common features or areas of overlap. The primary goal of intersection analysis is to determine where spatial entities from two or more datasets intersect or overlap, providing valuable insights into their spatial relationships and characteristics.

The International Gravity Standardization Net 1971 (IGSN71) is a worldwide network of gravity base stations providing a reference frame for absolute gravity values. It superseded the Potsdam system, which was defined by pendulum measurements in 1906. The network was adopted by the International Union of Geodesy and Geophysics in Moscow in 1971 and was the result of extensive cooperation by many organisations and individuals around the world. It contains more than 1,800 stations globally, and the data used in its adjustment included more than 25,000 gravimeter, pendulum and absolute measurements.

The Ingenuity helicopter, nicknamed Ginny, is an autonomous NASA helicopter that operated on Mars from 2021 to 2024 as part of the Mars 2020 mission. It was the first aircraft to make a powered, controlled flight in the atmosphere of another planet and could be said to have conducted the first ‘off-world’ aerial survey. Whilst not a geophysical surveying craft, it is equipped with two commercial-off-the-shelf (COTS) cameras: a high-resolution Return to Earth (RTE) camera and a lower-resolution navigation (NAV) camera. The NAV camera operates continuously throughout each flight, and its images are used for visual odometry to determine the aircraft’s position and motion during flight. As of December 16, 2021, over 2,000 black-and-white images from the navigation camera and 104 colour images from the terrain camera (RTE) had been published by NASA.

Ingenuity was designed by NASA’s Jet Propulsion Laboratory (JPL) in collaboration with AeroVironment, NASA’s Ames Research Center and Langley Research Center, with some components supplied by Lockheed Martin Space, Qualcomm, and SolAero.

Data collected by Ingenuity are intended to support the development of future ‘off-world’ helicopters capable of carrying larger payloads (perhaps even geophysical sensors!)

Igneous Rocks Susceptibility is the basic quantity that determines the magnetic properties of igneous rocks. Igneous rock susceptibility values are much higher (often 50-100 times higher) than those of metamorphic and sedimentary rocks. A generalised table of common igneous rock susceptibility values is shown below (units of ×10⁻³ SI).

| Rock Type | Range (×10⁻³ SI) | Average (×10⁻³ SI) |
|---|---|---|
| Andesite | 85-165 | 160 |
| Basalt | 0.2-175 | 70 |
| Diabase | 1.0-160 | 55 |
| Diorite | 0.6-120 | 85 |
| Dolerite | 1.0-35 | 17 |
| Gabbro | 1.0-90 | 70 |
| Granite | 0-50 | 2.5 |
| Peridotite | 90-200 | 150 |
| Porphyry | 0.3-200 | 60 |

Table of common Igneous Rocks Susceptibility values

The IGRF (International Geomagnetic Reference Field) is a time-varying model of the magnetic field which represents the Earth’s core component (i.e. the Earth’s main magnetic field) of the observed magnetic data. The IGRF is computed from data collected by worldwide magnetic observatories and satellite-mounted magnetometers, and it is updated every five years. Generally, after a magnetic survey has been completed, the IGRF is subtracted from the observed data to obtain the crustal component of the magnetic field (i.e. the Earth’s local magnetic field). The most precise way to correct for the IGRF is to compute its values for each point in the survey and subtract them on a point-by-point basis. If the survey is completed within a few days, then computing the IGRF as a grid may be adequate. The IGRF value can vary by 1-8 nT/month depending upon the location. The IGRF is sometimes referred to as the Normal Magnetic Field.

A Jacobsen Filter is a Spectral Domain filter that enhances the residual components of the gravity or magnetic fields by calculating the differences between two Wiener Filter based upward continuations or applying the modified Upward Continuation in accordance with a specified ‘pass-above’ or ‘pass-below’ depth. The Jacobsen Filter is also referred to as a Separation Filter.
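A minimal sketch of the separation idea on a regularly sampled profile: upward continuation multiplies the spectrum by exp(−|k|h), and the difference between continuations to two heights keeps mainly the wavelengths between them. This uses plain upward continuation rather than the Wiener-filter-based version described above (a simplifying assumption), and the function names are illustrative.

```python
import numpy as np

def upward_continue(profile, dx, height):
    """Upward-continue a regularly sampled 1-D profile by `height` (same units as dx)."""
    k = 2 * np.pi * np.fft.rfftfreq(len(profile), d=dx)    # angular wavenumber
    return np.fft.irfft(np.fft.rfft(profile) * np.exp(-k * height), n=len(profile))

def separation_filter(profile, dx, h1, h2):
    """Residual between two upward continuations (h1 < h2): a simple band-pass 'slice'."""
    return upward_continue(profile, dx, h1) - upward_continue(profile, dx, h2)
```

Choosing h1 = 0 returns the classic residual "observed minus upward-continued regional".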

The term ‘Juvenile’ is applied to water, gas or ore-forming fluids that originate from magma, as opposed to fluids of surface origin. Juvenile fluids may significantly contribute to the magnetization of intra-sedimentary and intra-basement faults or fracture zones. Juvenile water is also referred to as Plutonic Water.

Date of the magnetic or gravity survey observations recorded by the digital acquisition system as a four-digit value, which represents the Julian Day and the year of the survey. For example, the value of 3399 (or 9933) corresponds to February 2, 1999.

Julian Day is the day’s ordinal number since January 1 of the current year. Julian Day is used as a standard input parameter for digital records of the magnetic or gravity acquisition data. See Julian Date.
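Converting between this survey-style Julian Day (day-of-year) and calendar dates needs only Python's standard library. Note that it differs from the astronomical Julian Day Number, which is a continuous day count. The function names are illustrative, and two-digit years such as the ‘99’ in the example above would need an assumed century.

```python
from datetime import date, timedelta

def date_from_julian_day(day_of_year, year):
    """Calendar date for a survey 'Julian Day' (day-of-year, with 1 = January 1)."""
    days_in_year = date(year, 12, 31).timetuple().tm_yday     # 365 or 366
    if not 1 <= day_of_year <= days_in_year:
        raise ValueError(f"day {day_of_year} is outside 1..{days_in_year} for {year}")
    return date(year, 1, 1) + timedelta(days=day_of_year - 1)

def julian_day(d):
    """Day-of-year of a datetime.date."""
    return d.timetuple().tm_yday

print(date_from_julian_day(33, 1999))   # 1999-02-02
```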

Juxtaposition is a term used to describe the side-by-side position of specified tectonic blocks, magnetic terrains and other subsurface structural elements identified by potential field data interpretation.

The K-index, and by extension the Planetary K-index, was introduced by Julius Bartels in 1938. The indexes are used to characterise the magnitude of geomagnetic storms. Kp is an excellent indicator of disturbances in the Earth’s magnetic field and is used by organisations such as the US Space Weather Prediction Center (SWPC) to decide whether geomagnetic alerts and warnings need to be issued for users who are affected by these disturbances.

The estimated Planetary K-index operates on a scale from 0-9 with 1 being calm and 5 or more indicating a geomagnetic storm and is updated every minute. The Estimated 3-hour Planetary Kp-index is derived at the NOAA SWPC using data from ground-based magnetometer sites throughout North America, UK, Germany, and Australia. These data are made available through cooperation between the SWPC and data providers around the world, which currently includes the U.S. Geological Survey (USGS), Natural Resources Canada (NRCAN), the British Geological Survey (BGS), the German Research Centre for Geosciences (GFZ), and Geoscience Australia (GA).

The principal users affected by geomagnetic storms are electrical power grid generators, space program operators, users of radio signals that reflect off or pass through the ionosphere, and users of GPS systems, including airborne survey companies.

Karst is a type of topography formed by the dissolution of soluble rocks such as limestone, dolomite and gypsum. It is characterized by features such as poljes at the surface and drainage systems with sinkholes and caves underground.

Subterranean drainage may limit surface water, with few to no rivers or lakes. In regions where the dissolved bedrock is covered (perhaps by debris) or confined by one or more superimposed non-soluble rock strata, distinctive karst features may occur only at subsurface levels and can be totally missing above ground.

The study of paleokarst (buried karst in the stratigraphic column) is important in petroleum geology because as much as 50% of the world’s hydrocarbon reserves are hosted in carbonate rock, and much of this is found in porous karst systems. Paleokarst can often be detected by Gravity surveys.

Kriging is a statistical interpolation technique that estimates the value at a point in a heterogeneous grid from known values nearby. It relies on variograms: two-point statistical functions that describe how the difference between sample values increases, and their correlation decreases, as the separation between them increases.

The theoretical basis for the method was developed by the French mathematician Georges Matheron in 1960, based on the thesis of Danie G. Krige, who pioneered the plotting of distance-weighted average gold grades at the Witwatersrand reef complex in South Africa. Though computationally intensive in its basic formulation, kriging can be scaled to larger problems using various approximation methods.

Although kriging was developed originally for applications in geostatistics, it is a general method of statistical interpolation and can be applied within any discipline to sampled data from random fields that satisfy the appropriate mathematical assumptions. It can be used where spatially related data has been collected (in 2-D or 3-D) and estimates of “fill-in” data are desired in the locations (spatial gaps) between the actual measurements.

Kriging has been used in a variety of disciplines, including environmental science, hydrogeology, mining, natural resources, remote sensing and the prediction of oil production curves for shale oil wells.
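A minimal ordinary-kriging sketch for a single target point, assuming an exponential variogram with illustrative sill and range values (in practice these are fitted to the experimental variogram of the data). It solves for weights that sum to one and minimise the estimation variance, then returns the estimate and the kriging variance. Function names and parameters are hypothetical.

```python
import numpy as np

def exponential_variogram(h, sill=1.0, range_m=500.0, nugget=0.0):
    """Exponential variogram model; gamma(0) = 0 by definition."""
    h = np.asarray(h, float)
    return np.where(h > 0, nugget + sill * (1.0 - np.exp(-h / range_m)), 0.0)

def ordinary_kriging(xy, values, target, variogram=exponential_variogram):
    """Ordinary kriging estimate and kriging variance at one target point."""
    xy = np.asarray(xy, float)
    values = np.asarray(values, float)
    n = len(values)
    # Left-hand side: variogram between all sample pairs, bordered by the
    # unbiasedness constraint (weights sum to 1) via a Lagrange multiplier.
    a = np.ones((n + 1, n + 1))
    a[:n, :n] = variogram(np.linalg.norm(xy[:, None, :] - xy[None, :, :], axis=-1))
    a[n, n] = 0.0
    # Right-hand side: variogram between each sample and the target.
    b = np.ones(n + 1)
    b[:n] = variogram(np.linalg.norm(xy - np.asarray(target, float), axis=1))
    solution = np.linalg.solve(a, b)
    weights, mu = solution[:n], solution[n]
    estimate = weights @ values
    variance = weights @ b[:n] + mu
    return estimate, variance
```

With a zero nugget the method is an exact interpolator: at a sample location it returns the sample value with zero kriging variance.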

Keating’s method utilizes a simple pattern recognition technique to locate magnetic anomalies that resemble the response of a modelled kimberlite pipe.

The magnetic response of a vertically dipping cylinder is computed in grid form. The model parameters that may be adjusted include the depth, radius and length of the cylinder, the local magnetic inclination and declination, and the spatial extent of the anomaly. The model grid is then passed over a grid of total magnetic intensity as a “moving window”. The correlation between modelled and observed data is computed at each grid node using a first-order regression and then archived. The correlation coefficients that exceed a specific threshold (e.g., 75%) are retained for comparison with the magnetic and other exploration data.
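The moving-window correlation step can be sketched as below. The template is a generic 2-D grid supplied by the caller; computing the actual vertical-cylinder magnetic response is outside this sketch, and in the test a Gaussian bump stands in for it (an assumption). Pearson correlation is used, whose square equals the R² of a first-order (linear) regression between template and window. Function names are illustrative.

```python
import numpy as np

def moving_window_correlation(grid, template):
    """Pearson correlation between `template` and every same-sized window of `grid`.

    The result is stored at the window centre; nodes the window cannot reach stay NaN.
    """
    th, tw = template.shape
    ny, nx = grid.shape
    t = template.ravel()
    corr = np.full(grid.shape, np.nan)
    for i in range(ny - th + 1):
        for j in range(nx - tw + 1):
            window = grid[i : i + th, j : j + tw].ravel()
            if window.std() > 0:                       # skip flat windows
                corr[i + th // 2, j + tw // 2] = np.corrcoef(t, window)[0, 1]
    return corr

def candidate_nodes(corr, threshold=0.75):
    """Boolean mask of nodes whose correlation exceeds the threshold (NaN -> False)."""
    return np.nan_to_num(corr, nan=-1.0) > threshold
```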

A Kimberlite Pipe is a carrot- or mushroom-shaped, more or less vertical magmatic intrusion originating at depth (~150 km), which sometimes contains diamonds. It is named after the town of Kimberley in South Africa, where the discovery of an 83.5-carat (16.70 g) diamond called the Star of South Africa in 1869 spawned a diamond rush and the digging of the open-pit mine called the Big Hole.

The morphology of kimberlite pipes and their classical carrot shape is the result of explosive diatreme volcanism from very deep mantle-derived sources. These volcanic explosions produce vertical columns of rock that rise from deep magma reservoirs. The eruptions forming these pipes fracture the surrounding rock as it explodes, bringing unaltered xenoliths of peridotite to the surface. These xenoliths provide valuable information to geologists about mantle conditions and composition.

Kimberlites are peculiar igneous rocks because they contain a variety of mineral species with chemical compositions that indicate they formed under high pressure and temperature within the mantle. These minerals, such as chromium diopside (a pyroxene), chromium spinels, magnesian ilmenite, and pyrope garnets rich in chromium, are generally absent from most other igneous rocks, making them particularly useful as indicators for kimberlites.

The Koenigsberger ratio is the proportion of remanent magnetization relative to induced magnetization in natural rocks. It was first described by J.G. Koenigsberger. It is a dimensionless parameter often used in geophysical exploration to describe the magnetic characteristics of a geological body to aid in interpreting magnetic anomaly patterns.

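$$Q = \frac{M_r}{M_i} = \frac{M_r}{\chi H}$$

| Symbol | Meaning |
|---|---|
| $Q$ | Königsberger ratio |
| $M_r$ | remanent magnetization |
| $M_i = \chi H$ | induced magnetization |
| $\chi$ | the magnetic susceptibility; the influence of an applied magnetic field on a material |
| $H$ | the macroscopic magnetic field |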

The total magnetization of a rock is the sum of its natural remanent magnetization and the magnetization induced by the ambient geomagnetic field. Thus, a Koenigsberger ratio, Q, greater than 1 indicates that the remanence properties contribute the majority of the total magnetization of the rock.
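A small worked example, assuming SI units (magnetization in A/m, dimensionless SI volume susceptibility) and an inducing field given as a flux density in nanotesla, converted to H with H = B/μ0. The function name and input values are hypothetical.

```python
import math

MU0 = 4e-7 * math.pi     # vacuum permeability, H/m

def koenigsberger_ratio(remanent_a_per_m, susceptibility_si, field_nt):
    """Q = M_r / (chi * H), with the inducing field given as flux density in nT."""
    h = field_nt * 1e-9 / MU0            # B (T) -> H (A/m)
    induced = susceptibility_si * h      # induced magnetization, A/m
    return remanent_a_per_m / induced
```

With M_r = 1 A/m, χ = 0.01 SI and a 50,000 nT field, H ≈ 39.8 A/m, the induced magnetization is about 0.40 A/m and Q ≈ 2.5, so remanence would dominate.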

The Koefoed Method is a 2D technique for estimating the depth of a magnetic source. It relies on the horizontal distance between the points on the anomaly profile where the field reaches 1/2 and 3/4 of the maximum anomaly value.

Laplacian Gridding is a technique used in potential field geophysics to interpolate irregularly spaced data onto a regular grid. It is commonly applied in gravity and magnetic data processing, where irregularly spaced measurements are collected during airborne or ground-based surveys.

The Laplacian gridding method derives its name from the Laplacian Operator, the divergence of the gradient of a scalar field, which measures the field's curvature. In the context of gridding, the values at grid nodes are estimated by constraining the Laplacian of the interpolated surface (typically requiring it to be zero between data points) while honouring the irregularly spaced observed values.
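A minimal sketch of the idea, assuming the simplest form of Laplace (harmonic) interpolation: each observation is snapped to its nearest node and held fixed, and every other node is relaxed (Jacobi iteration) until it equals the mean of its four neighbours, which is the discrete form of "Laplacian equals zero". The function `laplacian_grid`, its parameters and the zero-gradient edge condition are illustrative assumptions; commercial gridding packages may weight data, handle edges and converge differently.

```python
import numpy as np


def laplacian_grid(x, y, z, x0, y0, cell, nx, ny, max_iter=10_000, tol=1e-6):
    """Grid scattered (x, y, z) data by Laplace (harmonic) interpolation.

    Observations are snapped to their nearest node and held fixed; all other
    nodes are relaxed until each equals the mean of its four neighbours.
    Returns an (ny, nx) array with rows along y and columns along x.
    """
    z = np.asarray(z, dtype=float)
    ix = np.clip(np.rint((np.asarray(x) - x0) / cell).astype(int), 0, nx - 1)
    iy = np.clip(np.rint((np.asarray(y) - y0) / cell).astype(int), 0, ny - 1)
    sums = np.zeros((ny, nx))
    counts = np.zeros((ny, nx))
    np.add.at(sums, (iy, ix), z)      # several observations may share one node
    np.add.at(counts, (iy, ix), 1)
    fixed = counts > 0
    grid = np.full((ny, nx), z.mean())
    grid[fixed] = sums[fixed] / counts[fixed]
    for _ in range(max_iter):
        # Edge padding gives a zero-gradient (Neumann) condition at the grid edge.
        p = np.pad(grid, 1, mode="edge")
        neighbour_mean = 0.25 * (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:])
        updated = np.where(fixed, grid, neighbour_mean)
        change = np.max(np.abs(updated - grid))
        grid = updated
        if change < tol:
            break
    return grid
```

For example, holding the left column of a 5 by 3 grid at 0 and the right column at 1 relaxes the interior to a linear ramp of 0, 0.25, 0.5, 0.75 and 1. Jacobi iteration is chosen here for clarity rather than speed; larger grids would normally use a faster solver.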

LiDAR is a method for determining ranges by targeting an object or a surface with a laser and measuring the time for the reflected light to return to the receiver. LiDAR may operate in a fixed direction (e.g., vertical) or it may scan multiple directions, in which case it is known as LiDAR scanning or 3D laser scanning, a special combination of 3-D scanning and laser scanning. LiDAR has terrestrial, airborne, and mobile applications.
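The ranging principle reduces to one line of arithmetic: the pulse travels to the target and back, so the range is half the round-trip time multiplied by the speed of light. The sketch below assumes propagation at the vacuum speed of light and ignores the small correction for the refractive index of air; the function name `lidar_range` is hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s (air is marginally slower)


def lidar_range(round_trip_time_s):
    """Range to the target from the two-way travel time of a laser pulse."""
    return C * round_trip_time_s / 2.0  # halve it: the pulse goes out and back


print(f"{lidar_range(1e-6):.3f} m")  # prints "149.896 m" for a 1 microsecond echo
```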

In airborne LiDAR, a laser scanner attached to an aircraft creates a 3-D point cloud model of the landscape during flight. This is currently one of the most detailed and accurate methods of creating Digital Elevation Models.

Lockheed Martin Corporation (LMT) is an American aerospace, arms, defence, information security, and technology corporation operating worldwide. It was formed by the merger of Lockheed Corporation with Martin Marietta in March 1995 and is headquartered in the Washington, D.C. area in the US. It also manufactures the most advanced Gravity Gradiometers in the world.

During the 1970s, as an executive in the US Department of Defense, John Brett initiated the development of the gravity gradiometer to support the Trident 2 system. A committee was later commissioned to seek commercial applications for the Full Tensor Gradient (FTG) system, which had been deployed on US Navy Ohio-class Trident submarines to aid covert navigation. As the Cold War ended, the US Navy released the classified technology and opened the door to its full commercialisation.

Several types of Lockheed Martin gravity gradiometer are currently in operation: the 3D Integrated Full Tensor Gravity Gradiometer (iFTG) and the Enhanced Full Tensor Gravity Gradiometer (eFTG), each deployed in either a fixed-wing aircraft or a vessel; and the FALCON gradiometer, a partial tensor system rather than a full tensor system, deployed in a fixed-wing aircraft or a helicopter.

The 3D FTG system contains three Gravity Gradiometry Instruments (GGIs), each consisting of two opposing pairs of accelerometers mounted on a spinning disc, with their measurement directions along the direction of spin. The three rotating GGIs are arranged in an Umbrella Configuration so that together they sense the full gravity gradient tensor.

The Larmor Signal is the signal generated by the precession of magnetic moments in a magnetometer sensor (protons in a proton precession magnetometer, or alkali atoms in an optically pumped magnetometer). Its frequency, the Larmor frequency, is proportional to the intensity of the external magnetic field. An electronic console converts the Larmor Signal into values of total magnetic intensity in nanoteslas (nT) using the gyromagnetic ratio.
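The conversion performed by the console amounts to dividing the measured frequency by the gyromagnetic ratio expressed in Hz/nT. The sketch below assumes the free-proton value of about 0.042577 Hz/nT by default; an optically pumped caesium sensor would use a much larger ratio (commonly quoted as roughly 3.5 Hz/nT), passed in through the `gamma_hz_per_nt` argument. The function `larmor_to_nt` is hypothetical, and real instruments apply their own calibrated constants.

```python
# Approximate gyromagnetic ratio of a free proton, gamma_p / (2 * pi), in Hz per nT.
PROTON_GAMMA_HZ_PER_NT = 0.042577


def larmor_to_nt(frequency_hz, gamma_hz_per_nt=PROTON_GAMMA_HZ_PER_NT):
    """Total field intensity (nT) from a Larmor frequency: B = f / gamma."""
    return frequency_hz / gamma_hz_per_nt


# A proton precession reading of about 2128.85 Hz corresponds to roughly 50,000 nT.
print(round(larmor_to_nt(2128.85), 1))
```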

A Latitude Correction is applied to observed gravity data to compensate for the increase in gravitational acceleration (attraction) from about 978000 mGal at the Equator to about 983200 mGal at the Poles. This increase arises because the Earth's radius varies with latitude as a result of polar flattening, and because the centrifugal force resulting from the Earth's rotation decreases towards the Poles as the distance to the Earth's axis decreases.
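In practice the correction subtracts a normal (theoretical) gravity value computed for each station's latitude. The sketch below assumes the widely quoted 1980 International Gravity Formula (a series approximation based on the GRS80 ellipsoid); a given survey may instead use the exact Somigliana closed form or another reference, so the formula choice and the helper names `normal_gravity_mgal` and `latitude_corrected` are assumptions.

```python
import math


def normal_gravity_mgal(latitude_deg):
    """Normal gravity (mGal) from the 1980 International Gravity Formula."""
    phi = math.radians(latitude_deg)
    return 978032.7 * (1 + 0.0053024 * math.sin(phi) ** 2
                       - 0.0000058 * math.sin(2 * phi) ** 2)


def latitude_corrected(observed_mgal, latitude_deg):
    """Remove the latitude-dependent normal gravity from an observed value."""
    return observed_mgal - normal_gravity_mgal(latitude_deg)


for lat in (0, 45, 90):
    print(lat, round(normal_gravity_mgal(lat), 1))
```

The formula returns 978032.7 mGal at the Equator and about 983218.6 mGal at the Poles.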

Levelling is the procedure of adjusting survey data so that they tie at line intersections. In aeromagnetic surveys, levelling is a general term for a variety of procedures applied to the recorded magnetic data to correct for the main distorting effects of diurnal variations, positioning errors, and mis-ties between traverse and tie lines.
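The simplest form of tie-line levelling can be sketched as a constant shift per traverse line: the mis-ties (traverse value minus tie value) at each line's intersections are averaged and subtracted from the whole line, with the tie lines held fixed. This is a hypothetical minimal example (`level_by_mean_mistie` and the data layout are assumptions); production levelling usually fits trends along each line, iterates between line sets and is followed by microlevelling.

```python
from collections import defaultdict

import numpy as np


def level_by_mean_mistie(lines, misties):
    """Shift each traverse line by its mean mis-tie, holding tie lines fixed.

    lines:   dict mapping line id -> sequence of readings along that line
    misties: iterable of (line id, traverse value minus tie value) pairs,
             one pair per intersection
    """
    per_line = defaultdict(list)
    for line_id, mistie in misties:
        per_line[line_id].append(mistie)
    levelled = {}
    for line_id, values in lines.items():
        shift = float(np.mean(per_line[line_id])) if per_line[line_id] else 0.0
        levelled[line_id] = np.asarray(values, dtype=float) - shift
    return levelled


# Hypothetical line L10 reads 2 nT high at both of its tie-line intersections.
print(level_by_mean_mistie({"L10": [10, 11, 12]}, [("L10", 2.0), ("L10", 2.0)]))
```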

Line Corrugations are artificial anomalies elongated in the direction of the traverse and/or tie lines. Line Corrugations represent residual errors remaining after standard levelling of the observed potential field data. Line Corrugations are removed by applying microlevelling techniques.

Line Spacing is the distance in meters between the traverse or tie lines of a survey. The distance between traverse lines is one of the most important parameters of an airborne survey. For mineral exploration, the traverse line spacing is derived mainly from the size of the target, such as an ore body or Kimberlite Pipe, and can be about 100-150 m. For petroleum exploration, the traverse line spacing is estimated as a function of the depth of the target interval at which anomalies are expected to be resolved. Often, the basement depth is taken as the reference level for estimating the line spacing.

Local Gravity is a residual component of the gravity field. Often, Local Gravity is the Bouguer Gravity from which the estimated regional component has been subtracted.
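One simple way to estimate the regional component is to fit a low-order polynomial surface to the Bouguer Gravity and subtract it. The sketch below assumes a first-order (planar) trend fitted by least squares; the trend order, and the choice of polynomial fitting over alternatives such as wavelength filtering or upward continuation, are assumptions, as is the helper name `residual_from_plane`.

```python
import numpy as np


def residual_from_plane(x, y, bouguer):
    """Fit a planar regional trend g = a + b*x + c*y by least squares.

    Returns (regional, residual), where residual = Bouguer - regional.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    g = np.asarray(bouguer, dtype=float)
    design = np.column_stack([np.ones_like(x), x, y])
    coeffs, *_ = np.linalg.lstsq(design, g, rcond=None)
    regional = design @ coeffs
    return regional, g - regional


# Hypothetical data: a tilted regional plus a 3 mGal local high at one station.
xx, yy = np.meshgrid(np.arange(5.0), np.arange(5.0))
g = 5.0 + 0.1 * xx - 0.2 * yy
g[2, 2] += 3.0
regional, residual = residual_from_plane(xx.ravel(), yy.ravel(), g.ravel())
print(residual.reshape(5, 5).round(2))
```

Because the local high also pulls the fitted plane slightly upwards, the residual is not exactly zero away from it; this leakage is one reason the regional is often fitted to a wider area than the target.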

The Local Regional Component is a component of the observed potential field data whose spatial wavelengths are longer than the dominant wavelengths of the target anomalies, but shorter than wavelengths on the order of the extent of the survey area.

The tesla (symbol: T) is the unit of magnetic flux density (also called magnetic B-field strength) in the International System of Units (SI). The nanotesla (nT; 1 nT = 10⁻⁹ T) is commonly used in geophysical applications.

A magnetometer is an instrument that measures the magnetic field or magnetic dipole moment. Different types of magnetometers measure the direction, strength, or relative change of a magnetic field at a particular location. Magnetometers are widely used to measure the Earth's magnetic field and, in geophysical surveys, to detect magnetic anomalies of various types.

Magnetometers assist mineral explorers both directly (e.g., gold mineralisation associated with magnetite, diamonds in kimberlite pipes) and, more commonly, indirectly, by mapping geological structures conducive to mineralisation (e.g., shear zones and alteration haloes around granites). In oil and gas exploration, the delineation of faults, shear zones, volcanics and other geological structures is important in de-risking reservoir and seal.

The magnetic analytic signal is a mathematical technique used in magnetic data processing and interpretation. It is employed to enhance the detection and delineation of magnetic anomalies associated with subsurface geological structures or magnetic sources.

Magnetic data collected in airborne or ground-based magnetic surveys often contain complex anomalies caused by various geological features, such as faults, dykes, ore bodies, and other magnetic sources. The magnetic analytic signal is a useful tool for highlighting the locations and edges of these magnetic sources, making them stand out from the background magnetic field.

The magnetic analytic signal effectively places the derived anomaly over the magnetic source irrespective of the direction of magnetisation. It is computed from Cartesian derivatives of the field and is therefore strongly high-pass in nature and dominated by the shallower sources. The magnetic analytic signal has several important properties that make it valuable in geophysical interpretation.
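The amplitude of the analytic signal is commonly computed as the square root of the sum of the squared derivatives of the field along x, y and z. The sketch below assumes gridded total magnetic intensity with rows along y and columns along x, takes horizontal derivatives by finite differences, and obtains the vertical derivative in the wavenumber domain by multiplying the spectrum by |k|. It omits the padding and tapering normally used to suppress FFT edge effects, and the function name `analytic_signal` is hypothetical.

```python
import numpy as np


def analytic_signal(tmi, dx, dy):
    """Amplitude of the 3D analytic signal (total gradient) of a gridded field.

    tmi: 2D array with rows along y and columns along x; dx, dy: grid spacing.
    """
    tmi = np.asarray(tmi, dtype=float)
    ny, nx = tmi.shape
    # Horizontal derivatives by central finite differences (axis 0 is y).
    dtdy, dtdx = np.gradient(tmi, dy, dx)
    # Vertical derivative in the wavenumber domain: multiply the spectrum by |k|.
    # The FFT treats the grid as periodic, so edges are only approximate here.
    kx = 2 * np.pi * np.fft.fftfreq(nx, d=dx)
    ky = 2 * np.pi * np.fft.fftfreq(ny, d=dy)
    k = np.hypot(*np.meshgrid(kx, ky))
    dtdz = np.real(np.fft.ifft2(np.fft.fft2(tmi) * k))
    return np.sqrt(dtdx**2 + dtdy**2 + dtdz**2)
```

Because every term is a derivative, a uniform field returns zero everywhere, and short-wavelength (shallow) anomalies are amplified relative to long-wavelength ones, which matches the high-pass behaviour described above.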

In the context of magnetic surveying or geophysical exploration, a Magnetic Base Station is a fixed location where the Earth's magnetic field is monitored for temporal variations throughout a magnetic survey. These variations, caused by electric currents in the upper atmosphere, are superimposed on the magnetic survey data. The magnetic base station data are used to correct the survey data for these variations. When the Magnetic Base Station records excessive temporal disturbances in the magnetic field, surveying is suspended until the disturbances subside to within acceptable limits.
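A minimal sketch of how base station data might be applied, assuming the base station and survey clocks are synchronised, that linear interpolation between base readings is adequate, and that the variation is measured relative to a datum (by default the mean base reading). The function `diurnal_correct` and its parameters are hypothetical.

```python
import numpy as np


def diurnal_correct(survey_time, survey_nt, base_time, base_nt, base_datum_nt=None):
    """Subtract base-station temporal variations from survey readings.

    base_time must be increasing (required by np.interp); times share one clock.
    """
    base_nt = np.asarray(base_nt, dtype=float)
    if base_datum_nt is None:
        base_datum_nt = base_nt.mean()  # assumed datum: mean base-station value
    # Base-station value at each survey time, relative to the datum.
    variation = np.interp(survey_time, base_time, base_nt) - base_datum_nt
    return np.asarray(survey_nt, dtype=float) - variation


# Hypothetical: the base drifts from 50000 to 50010 nT over 10 s, datum 50000 nT.
print(diurnal_correct([5.0], [50105.0], [0.0, 10.0], [50000.0, 50010.0], 50000.0))
```

Here the base station has drifted by 5 nT at the time of the survey reading, so 5 nT is removed from it.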

The magnetic declination and inclination are essential properties of the Earth's magnetic field. The predominant source of the magnetic field is the dynamo effect inside the planet, a naturally occurring phenomenon in which the motion of the electrically conducting liquid outer core, driven by heat from the core, sustains electric currents that create a magnetic field. A three-dimensional vector represents the magnetic field of the Earth at any location. An ordinary compass is sufficient to indicate the horizontal direction of this vector.

Magnetic Declination of the Earth, or magnetic variation, is the angle between magnetic north, as indicated by a compass, and true geographic north. The value of the declination changes with location and time. It is represented by the letter D or the Greek letter δ.

The declination is positive if magnetic north lies east of true north, and negative if magnetic north lies west of true north.
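To make the sign convention concrete, a compass (magnetic) bearing can be converted to a true bearing by adding the declination. The sketch below assumes bearings in degrees measured clockwise from north and east-positive declination; the helper name `magnetic_to_true` and the example values are hypothetical.

```python
def magnetic_to_true(magnetic_bearing_deg, declination_deg):
    """True bearing from a compass bearing, with declination positive east."""
    # Magnetic north itself has a true bearing equal to the declination.
    return (magnetic_bearing_deg + declination_deg) % 360.0


print(magnetic_to_true(350.0, 15.0))   # 5.0: easterly declination of 15 degrees
print(magnetic_to_true(10.0, -12.0))   # 358.0: westerly declination of 12 degrees
```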

Isogonic Lines join points on the Earth's surface that have the same declination value. Agonic Lines are lines along which the declination is zero.

Magnetic Inclination, or Magnetic Dip, is the angle between the horizontal plane at the Earth's surface and the Earth's magnetic field lines. The magnetic inclination can be observed with a freely pivoting magnetic needle (a dip needle) as it aligns itself with the field lines.

Because the geomagnetic field is approximately that of a dipole, its field lines are steep near the magnetic poles and nearly horizontal near the magnetic equator. Hence, the north-seeking end of a freely pivoting needle points downward in the northern hemisphere and upward in the southern hemisphere. The degree of inclination varies with location on the Earth.
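Both angles follow directly from the three components of the field vector. The sketch below assumes the usual geomagnetic convention of X pointing to geographic north, Y east and Z vertically down, so declination is positive east of true north and inclination is positive when the field points downward. The function name `declination_inclination` and the example components are hypothetical.

```python
import math


def declination_inclination(north_nt, east_nt, down_nt):
    """Declination D and inclination I (degrees) from field components X, Y, Z."""
    horizontal = math.hypot(north_nt, east_nt)                     # horizontal intensity H
    declination = math.degrees(math.atan2(east_nt, north_nt))     # D, positive east
    inclination = math.degrees(math.atan2(down_nt, horizontal))   # I, positive downward
    return declination, inclination


# Hypothetical northern-hemisphere site: field points north-east and downward.
print(declination_inclination(18000.0, 2000.0, 45000.0))
```

A negative Z (field pointing upward) gives a negative inclination, as expected in the southern hemisphere.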

Magnetic reduction to the pole and magnetic reduction to the equator are data processing techniques used in magnetic surveys to transform the measured magnetic field data into the equivalent values that would be observed if the measurements were made at either the magnetic poles or the magnetic equator, respectively. These transformations simplify the interpretation of magnetic anomalies; in the absence of any remanent magnetisation, reduction to the pole transforms a dipolar anomaly into a monopolar anomaly centred over the magnetic source (akin to a gravity anomaly).

In geophysics, a magnetic anomaly is a local variation in the Earth’s magnetic field resulting from variations in the chemistry or magnetism of the rocks. Mapping of these variations over an area is valuable in detecting structures obscured by overlying material.

Magnetic anomalies are generally a small fraction of the total magnetic field, which ranges from 25,000 to 65,000 nanoteslas (nT). To measure anomalies, magnetometers need a sensitivity of 10 nT or less. Several types of magnetometer are used to measure magnetic anomalies. Earthline Consulting uses proton precession magnetometers, which measure the strength of the field but not its direction and therefore do not need to be oriented. Each measurement takes a second or more. This type of magnetometer is used in most ground surveys, except for borehole and high-resolution magnetic gradiometer surveys. For aeromagnetic surveys, Earthline Consulting uses optically pumped magnetometers, which use alkali-metal vapours (most commonly rubidium and caesium) and have high sample rates and sensitivities of 0.001 nT or less.

Airborne magnetometers detect the change in the Earth’s magnetic field using sensors attached to the aircraft in the form of a “stinger” or historically by towing a magnetometer on the end of a cable.

Magnetotellurics (MT) is an electromagnetic geophysical method for inferring the earth’s subsurface electrical conductivity from measurements of natural geomagnetic and geoelectric field variation at the Earth’s surface.

Investigation depth ranges from 100 m below ground by recording higher frequencies down to 200 km or deeper with long-period soundings. Proposed in Japan in the 1940s, and France and the USSR during the early 1950s, MT is now an international academic discipline and is used in exploration surveys around the world.
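The link between recording frequency and investigation depth can be illustrated with the electromagnetic skin depth, δ = √(2ρ/(ωμ0)), or roughly 503√(ρ/f) metres, the depth at which a field diffusing into a uniform earth of resistivity ρ decays to 1/e of its surface value. The sketch below assumes a uniform half-space; real depth of investigation depends on the actual resistivity structure, and the function name `skin_depth_m` and the example resistivity are hypothetical.

```python
import math

MU0 = 4e-7 * math.pi  # magnetic permeability of free space, H/m


def skin_depth_m(resistivity_ohm_m, frequency_hz):
    """Skin depth (m) in a uniform half-space: sqrt(2 * rho / (omega * mu0))."""
    omega = 2 * math.pi * frequency_hz
    return math.sqrt(2 * resistivity_ohm_m / (omega * MU0))


# Hypothetical 100 ohm-m ground: high frequencies stay shallow, long periods go deep.
for f in (1000.0, 1.0, 0.001):
    print(f"{f:>8} Hz: {skin_depth_m(100.0, f) / 1000:8.1f} km")
```

For this example, 1000 Hz gives about 0.16 km, while a 1000-second period (0.001 Hz) reaches about 159 km.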

Commercial uses include hydrocarbon (oil and gas) exploration, geothermal exploration, carbon sequestration, mining exploration, and hydrocarbon and groundwater time-lapse monitoring. Research applications include experimentation to further develop the MT technique, sub-glacial water flow mapping, and earthquake precursor research.

MEMS (micro-electromechanical systems) Accelerometers are used in gradiometers to measure the gradient of the gravitational field. They are microscopic devices incorporating both electronic and moving parts, and are typically constructed of components between 1 and 100 micrometres in size (i.e., 0.001 to 0.1 mm).

MEMS became practical once they could be fabricated using modified semiconductor device fabrication technologies, which include moulding and plating, wet and dry etching, electrical discharge machining and other technologies capable of manufacturing small devices. They merge at the nanoscale into nanoelectromechanical systems (NEMS) and nanotechnology.

MEMS accelerometers are increasingly present in portable electronic devices and video-game controllers, to detect changes in the positions of these devices.

Magnetometers based on helium-4 excited to its metastable triplet state by a plasma discharge were first developed in the 1960s and 1970s by Texas Instruments, and from the late 1980s by CEA-Leti. The latter pioneered a configuration that cancels the dead zones which are a recurrent problem of atomic magnetometers. This configuration has been shown to achieve an accuracy of 50 pT in orbit.

The European Space Agency (ESA) chose this technology for the Swarm mission, which was launched in 2013 to study the Earth's magnetic field. An experimental vector mode, which could compete with fluxgate magnetometers, was tested during this mission with overall success.

Magnetic gradiometers are pairs of magnetometers with their sensors separated, usually horizontally, by a fixed distance. The readings are subtracted to measure the difference between the sensed magnetic fields, which gives the field gradients caused by magnetic anomalies. This is one way of compensating both for the variability in time of the Earth’s magnetic field and for other sources of electromagnetic interference, thus allowing for more sensitive detection of anomalies. Because nearly equal values are being subtracted, the noise performance requirements for the magnetometers are extreme.
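The compensating effect of differencing can be shown with a short sketch: a temporal variation common to both sensors cancels exactly in the difference, leaving only the spatial change across the separation. The sketch assumes the two sensors are sampled simultaneously and the gradient is approximated by a simple finite difference; the function `horizontal_gradient` and the readings are hypothetical.

```python
import numpy as np


def horizontal_gradient(sensor_a_nt, sensor_b_nt, separation_m):
    """Finite-difference field gradient (nT/m) from two simultaneous sensors."""
    a = np.asarray(sensor_a_nt, dtype=float)
    b = np.asarray(sensor_b_nt, dtype=float)
    return (b - a) / separation_m  # common-mode variation cancels here


# Hypothetical readings: the same diurnal drift appears on both sensors.
drift = np.array([0.0, 3.0, 7.0])
sensor_a = 50_002.0 + drift
sensor_b = 50_006.0 + drift
print(horizontal_gradient(sensor_a, sensor_b, 2.0))  # 2 nT/m at every sample
```

Because the anomaly signal is a small difference between two large, nearly equal readings, any uncorrelated noise in either sensor passes straight into the gradient, which is why sensor noise performance is so critical.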